Glidein Factory

Description

A Glidein Factory is the glidein-producing infrastructure of a glidein-based WMS.

Overview

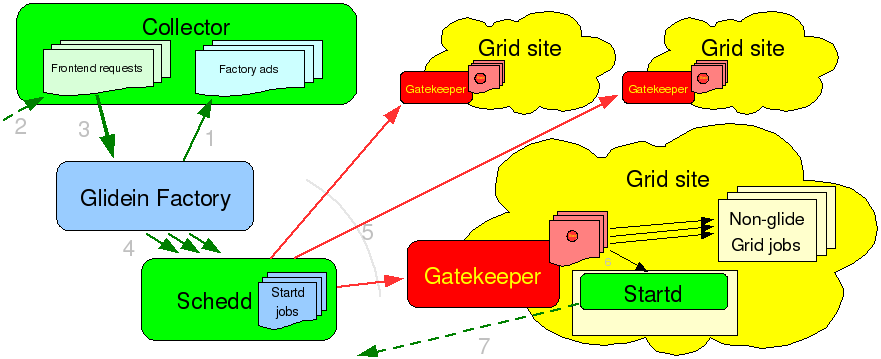

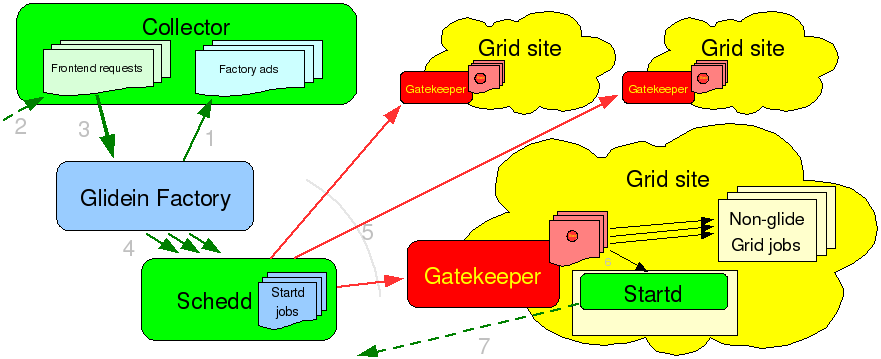

The main task of the Glidein

Factory is to advertise itself, listen for requests from frontend

clients and submit glideins.

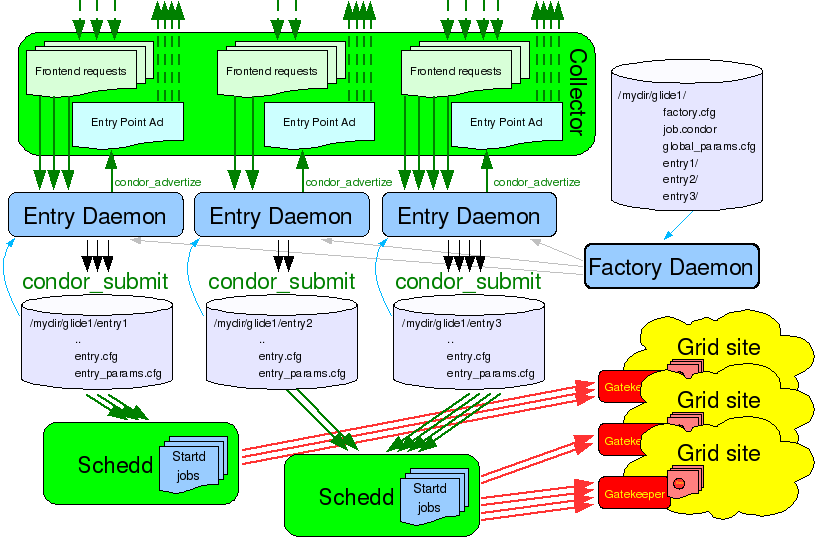

Look at the picture below for a schematic view.

A single glidein factory can handle multiple kinds of glideins, also called glidein entry points (as they usually point to different Grid resources). For each entry point, it will advertise a different ClassAd.

Similarly, each request from a frontend client will affect a single entry point; a frontend will need to advertise several requests in order to have glideins submitted to all the desired resources.
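The one-request-per-entry rule can be illustrated with a minimal sketch that models factory ClassAds as plain dictionaries and builds one request per selected entry point. The attribute names (`EntryName`, `Site`, `ReqEntry`, `Frontend`) and all values are hypothetical, chosen for this example only; they are not the attributes the factory actually publishes.

```python
def select_entries(factory_ads, wanted_sites):
    """Return the entry ads whose (hypothetical) Site attribute is wanted."""
    return [ad for ad in factory_ads if ad["Site"] in wanted_sites]


def build_requests(frontend_name, entries):
    """Build one request per entry point; a request never spans entries."""
    return [{"Frontend": frontend_name, "ReqEntry": ad["EntryName"]} for ad in entries]


# One ClassAd per entry point, as advertised by a single factory (made-up values).
factory_ads = [
    {"EntryName": "entry_a", "Site": "SiteA"},
    {"EntryName": "entry_b", "Site": "SiteB"},
    {"EntryName": "entry_c", "Site": "SiteC"},
]

# A frontend interested in two sites ends up advertising two separate requests.
requests = build_requests("my_frontend", select_entries(factory_ads, {"SiteA", "SiteC"}))
```

Under these assumptions, covering more resources simply means advertising more requests, one for each entry point of interest.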

The Glidein Factory philosophy

The main idea behind a Glidein Factory is to make the life of a VO frontend as easy as possible. To do that, the factory needs to abstract away as many details as possible.

For starters, the factory

itself is the one that knows the details of various Grid sites and

properly configures the glidein entry points, so they will run

without any additional knowledge. A frontend only needs to know that

an entry point exists, and the parameters it takes.

Additionally,

the factory also does the submissions themselves. The frontend just

publishes that it needs some glideins and the factory will

start submitting them. The only handle the frontend may want to use

is the ability to regulate the rate of glideins that are sent to the

Grid site. In the current implementation, this can be achieved by

setting the desired number of idle

glideins to be kept in the Grid queue.

The typical scenario features a frontend that has many (hundreds or even thousands of) user jobs in the queue and is looking for resources to run them. It will fetch the factory ClassAds, select the ones that satisfy its needs, and advertise ClassAds with requests for those glideins. If the frontend ever runs out of user jobs, it will advertise the fact that it does not need those glideins anymore. In practical terms, this means asking the factory to keep exactly 0 idle glideins in the queue.
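The regulation described above can be sketched as a small function. This is only an illustration: the function name and the `max_idle` cap are assumptions made for this example, and the real frontend logic may weigh other factors.

```python
def requested_idle_glideins(idle_user_jobs, max_idle):
    """Number of idle glideins to ask the factory to keep in the Grid queue.

    idle_user_jobs: user jobs currently waiting for resources.
    max_idle: hypothetical cap used to limit the submission rate.
    """
    if idle_user_jobs <= 0:
        # No user jobs left: ask the factory to keep exactly 0 idle glideins.
        return 0
    return min(idle_user_jobs, max_idle)
```

With a cap of 10, a frontend holding 500 idle jobs would request 10 idle glideins, and one with an empty queue would request 0.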

Implementation details

The Glidein Factory Daemon

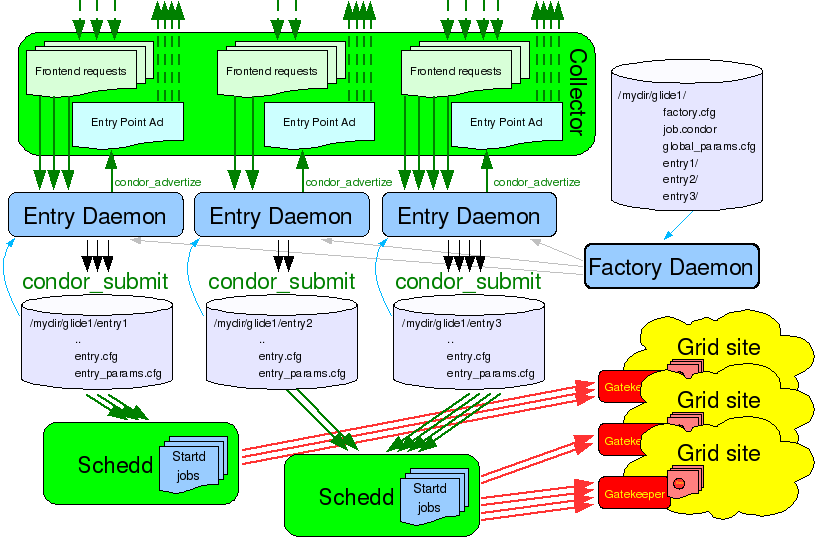

The Glidein Factory is composed of several entry points. The Factory Daemon is really just a small process tasked with starting and monitoring the Factory Entry Daemons. See the picture in the next section for a logical overview.

All daemons of a Glidein Factory share the same directory tree.

The root of the tree contains the main configuration files used by

the Factory daemon.

More details about the Glidein Factory Daemon internals can be

found here.

The Factory Entry Daemons

The Glidein Factory is composed of several Factory Entry Daemons,

each advertising itself and processing the incoming requests. See the

picture below for a logical overview.

All daemons of a Glidein Factory share the same directory tree. The root of the tree contains the common startup and configuration files, while each entry point has a few additional configuration files of its own. Each entry point is completely described by these files on disk; the Factory Entry Daemons only extract information about entry point attributes and supported parameters needed for advertising.

When glidein jobs are submitted, only the frontend provided

parameters need to be given to the glidein startup script, as the

script itself will autonomously gather all the other

information.

More details about the Factory Entry Daemon

internals can be found here.

The glidein startup script

As said in the overview,

a glidein is essentially a properly configured Condor startd.

However, somebody needs to do that configuration. So we need a job

startup script that will do the work.

A startup script needs

to perform several tasks:

- check that the working environment on the worker node is

reasonable (else user jobs will fail)

- obtain the Condor binaries

- configure Condor

- prepare the environment for Condor

- start Condor
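The ordering of these tasks can be sketched as a list of stages that are run one after another, stopping at the first failure. This is a conceptual illustration only: the real startup logic lives in shell scripts, and the stage names and placeholder callables below are assumptions made for this example.

```python
def run_stages(stages):
    """Run (name, callable) stages in order.

    Returns the name of the first stage that fails, or None if all succeed.
    """
    for name, stage in stages:
        if not stage():
            return name
    return None


# Placeholder stages mirroring the task list; each returns True on success.
stages = [
    ("check_environment", lambda: True),
    ("obtain_condor_binaries", lambda: True),
    ("configure_condor", lambda: True),
    ("prepare_environment", lambda: True),
    ("start_condor", lambda: True),
]

failed_stage = run_stages(stages)
```

Stopping at the first failing stage matters most for the environment check: if the worker node is not usable, there is no point in fetching or starting Condor.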

Given the complexity of the task, and for the sake of flexibility,

it makes sense to split the script into several pieces. So the glidein

job is composed of several pieces, including the startup script

pieces, the Condor binaries, and a base configuration file.

However, having a Grid job with data files can represent a

challenge; each Grid flavor treats data in a different way!

To make the system as general as possible, the Glidein Factory requires the use of a Web server to distribute its data. This version of the glidein-based factory was tested with Apache and TUX, but any other Web server should work just as well, as only static file delivery is required.

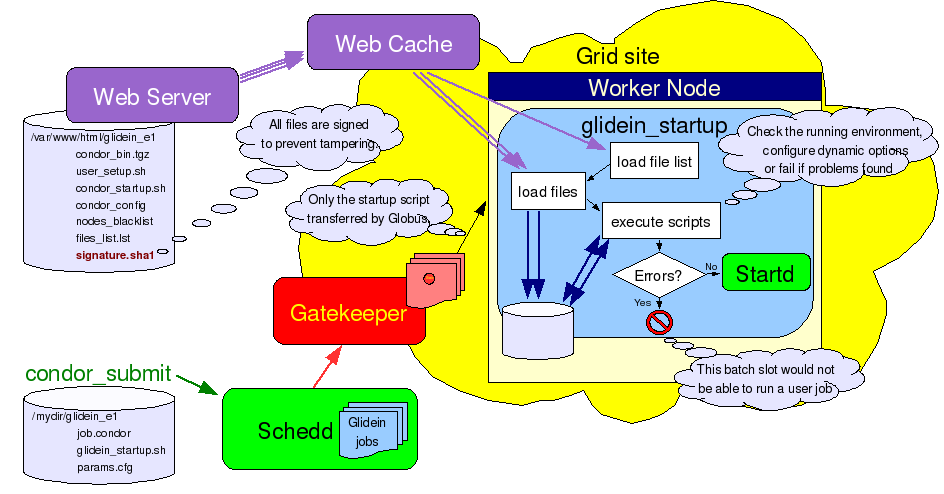

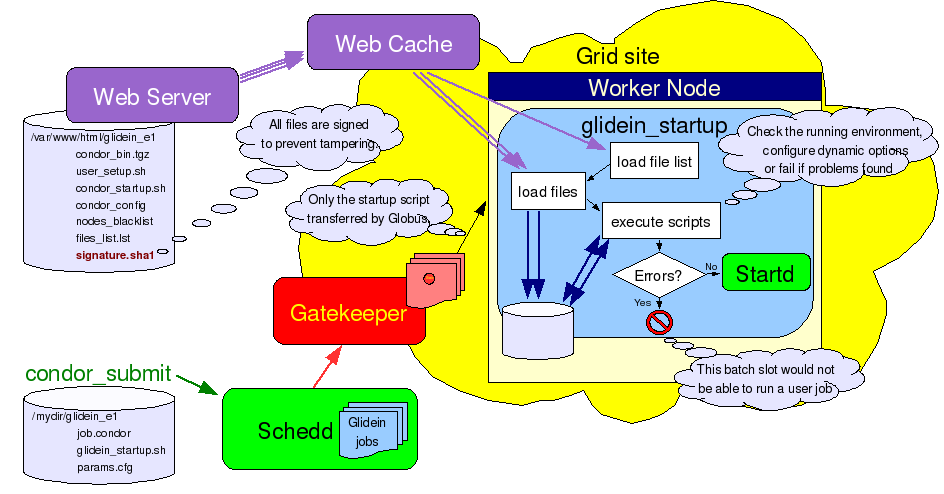

A general overview of how a glidein

starts up is given in the picture below.

The

task of the basic startup script (called glidein_startup.sh)

is thus reduced to loading the other files, including the support

scripts, the base config files and the Condor binaries. The list of

files to load is obtained from the Web server as one of the first

steps, making the startup script completely generic.

Please notice two things. First, all the files transferred over the Web are signed using sha1sum. This prevents an attacker from tampering with the files while in transit. This is especially important for executables and scripts (to prevent arbitrary code from being executed), but is useful for configuration files too.

The signature checking is implemented in two steps:

- The signatures of all the files to be transferred are saved in a file called signature.sha1 and stored on the Web server. The signature of the signature file is then passed as one of the parameters to the startup script.

- The startup script loads the signature file from the Web server and verifies its signature. All other downloads, including the file containing the list of other files, are checked against the values in the signature file. See the pseudo-code below.

    # known_sha1 is the signature of signature.sha1, passed as a startup parameter
    wget http://head.fnal.gov/glidein_g1/signature.sha1
    echo "$known_sha1  signature.sha1" | sha1sum -c - || exit 1

    # verify the file list against its entry in the signature file
    grep files_list signature.sha1 > filelist.sha1
    wget http://head.fnal.gov/glidein_g1/files_list.lst
    sha1sum -c filelist.sha1 || exit 2

    # download every listed file, then verify all of them at once
    while read -r file; do
        wget "http://head.fnal.gov/glidein_g1/$file"
    done < files_list.lst
    sha1sum -c signature.sha1 || exit 3

    # launch scripts
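The same two-step check can be sketched in Python on in-memory data, which makes the chain of trust easier to see: one trusted digest vouches for the signature file, and the signature file vouches for everything else. This is a minimal sketch, not the startup script's actual code; the function names and the sample file `condor_startup.sh` are assumptions made for this example.

```python
import hashlib


def sha1_hex(data):
    """Hex SHA1 digest of a bytes object."""
    return hashlib.sha1(data).hexdigest()


def parse_signatures(signature_text):
    """Parse 'digest  filename' lines, the format written by sha1sum."""
    signatures = {}
    for line in signature_text.splitlines():
        if line.strip():
            digest, name = line.split(None, 1)
            # sha1sum prefixes the name with '*' in binary mode.
            signatures[name.strip().lstrip("*")] = digest
    return signatures


def verify_downloads(known_sha1, signature_file, files):
    """Return True only if the signature file and every download check out.

    known_sha1: digest of the signature file, passed as a startup parameter.
    signature_file: raw bytes of signature.sha1.
    files: mapping of file name -> downloaded bytes.
    """
    # Step 1: the signature file itself must match the trusted digest.
    if sha1_hex(signature_file) != known_sha1:
        return False
    # Step 2: every other download must match its entry in the signature file.
    signatures = parse_signatures(signature_file.decode())
    return all(signatures.get(name) == sha1_hex(data) for name, data in files.items())


# Made-up content standing in for files fetched from the Web server.
files = {"files_list.lst": b"condor_startup.sh\n", "condor_startup.sh": b"#!/bin/sh\n"}
signature_file = "".join(
    "%s  %s\n" % (sha1_hex(data), name) for name, data in files.items()
).encode()
known_sha1 = sha1_hex(signature_file)
```

Calling `verify_downloads(known_sha1, signature_file, files)` succeeds for the untouched data and fails as soon as either the signature file or any downloaded file is altered.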

The second point worth stressing is the set of advantages that come from using standard Web technologies. Web technologies are widely used nowadays and there is a plethora of tools that can be readily used. In our case, we can reduce the network load and speed up startup times by using a Web cache near the worker nodes, if available. The Glidein Factory was tested with Squid, but other products should work as well. It is also worth mentioning that both OSG and gLite have expressed interest in deploying a Squid server on every Grid site.

More details about the startup

script internals and support scripts provided by the current

implementation can be found here.

Glidein Factory management

To run a Glidein Factory, you need to perform two steps:

- Create the configuration directories.

- Run the Glidein Factory Daemon, which will internally start the Entry Daemons.

Warning: Never change the files in an entry point by hand after it has been created!

Use the reconfig tools described below instead.

Creating a new configuration directory tree

To create an entry point you need:

- The directory tree containing the entry points is created by launching the command

glideinWMS/creation/create_glidein

- Another option is to use the provided installer.

Technical details of the configuration are described on a dedicated page.

Warning: Never change the files in an entry point by hand after it has been created!

Use the reconfig tools described below instead.

Starting a Glidein Factory Daemon

The glidein factory comes with an init.d-style startup script.

Glidein factory starting and stopping is handled by

<glidein directory>/factory_startup start|stop|restart

You can check that the factory is actually running with

<glidein directory>/factory_startup status

See the monitoring section on how to check other info.

Downtime handling

Starting with v1_3, the glidein factory supports the dynamic

handling of downtimes.

Downtimes can be handled both at the

factory and at the entry level.

Downtimes are useful when one or more Grid sites are known to have

issues (can be anything from scheduled maintenance to a storage

element corrupting user files).

In this case the factory

administrator can temporarily stop submitting glideins to the

affected sites, without stopping the factory as a whole.

Downtimes are handled with

- <glidein directory>/factory_startup up|down 'factory'|<entry name> [<delay>]

If the entry points are linked to an information system (BDII or RESS), you can also

use the following command to automatically adjust the downtime based on the information

provided by that information system (i.e. the entry point will be marked up

if and only if the information system claims the gatekeeper is accepting new jobs)

- <glidein directory>/factory_startup infosysdown

Caution: An admin can handle downtimes both at the entry and at

the factory level.

Please be aware that both will be used.
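One reading of "both will be used" is that an entry point receives glideins only when neither the factory as a whole nor the entry itself is in downtime. The sketch below encodes that reading; it is an assumption made for illustration, and the function name is hypothetical.

```python
def entry_accepts_submissions(factory_down, entry_down):
    """True if glideins may be submitted to the entry point.

    Assumes a downtime at either level is enough to stop submissions.
    """
    return not (factory_down or entry_down)
```

Under this reading, bringing a single entry back up has no effect while the factory-level downtime is still in place.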

Note: Advanced users may also want to have a look at

- glideinWMS/factory/manageFactoryDowntimes.py

Getting configuration information

To get the list of entries defined in the factory, use

- <glidein directory>/factory_startup info -entries -CE

To see which entries are currently active, use

- <glidein directory>/factory_startup statusdown entries

PS: Make sure the whole factory is not disabled, by using

- <glidein directory>/factory_startup statusdown factory

Updating a configuration directory tree

The files in an entry point must never be changed by hand after the directory structure has been created.

Manual changes are problematic for two reasons: the files are signed, and they may be cached.

There is only one file that is neither signed nor cached and can thus be modified: the blacklisting file called nodes.blacklist. This one can be used to temporarily blacklist malfunctioning nodes that would pass regular sanity checks (for example: memory corruption or I/O errors), while waiting for the Grid site admin to take action.

The proper procedure to update an entry point is to make a copy of the official configuration file (i.e. glideinWMS.xml), modify the copy, and run

<glidein directory>/factory_startup reconfig config_copy_fname

This will update the directory tree and restart the factory and entry daemons.

Please notice that if you make any errors in the new configuration file, the reconfig

script will throw an error and do nothing. So you should never need to worry about

corrupting the installation tree using this tool.

NOTE: The reconfig tool does not kill the factory in case of errors.

Hence it is recommended that you disable any entry points that will not be used.

Testing with a local glidein

In case of problems, you may want to test a glidein by hand.

Move to the glidein directory and run

./local_start.sh entry_name fast -- GLIDEIN_Collector yourhost.dot,your.dot,domain

This will start a glidein on the local machine, pointing to the yourhost.your.domain collector.
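In the command above, the collector name is written with each dot replaced by `.dot,`. A small helper of the following kind can produce that form. The function name is hypothetical, and only the dot substitution visible in the example is covered; any other escaping rules are outside the scope of this sketch.

```python
def encode_glidein_param(value):
    """Replace each '.' with '.dot,' as in the local_start.sh example."""
    return value.replace(".", ".dot,")


collector_arg = encode_glidein_param("yourhost.your.domain")
```

Here `collector_arg` holds `yourhost.dot,your.dot,domain`, the form passed after `GLIDEIN_Collector` in the example.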

Please make sure you have a valid Grid environment set up,

including a valid proxy, as the glidein needs it in order to work.

Upgrading to a new version of glideinWMS

Upgrading a factory configuration tree to a new version of glideinWMS can be done in two ways:

- Install the new glideinWMS code in a separate directory, and create a new configuration tree as explained above. The configuration file (glideinWMS.xml) of the existing configuration tree can be used as a base for the new one; only the glidein_name needs to be changed. See the configuration page for more defaults.

- Overwrite the glideinWMS installation and reconfig. If getting the code from CVS, a cvs update -r version will update the code. Warning: Check the revision history before doing this. Not all versions are compatible.

Which option you choose is really up to you; the second one is easier, but the first one is cleaner and easier to revert.

Glidein Factory Monitoring

Monitoring is an essential part of any service. One has to monitor

to both maintain the health of the system and to tune the system for

anticipated growth. The various ways you can monitor a Glidein

Factory are described below.

Log files

The Factory Daemon, the Entry Daemons, Condor-G and the Glideins all write

extensive log files. The logs are kept for a week and then deleted.

The Glidein Factory Daemon log files are located in

- <glidein directory>/log/factory_info.<date>.log

- <glidein directory>/log/factory_err.<date>.log

Each Entry Daemon has its log files in

- <glidein directory>/entry_<entry name>/log/factory_info.<date>.log

- <glidein directory>/entry_<entry name>/log/factory_err.<date>.log

For each client an Entry Daemon is serving, one Condor-G job log is used

- <glidein directory>/entry_<entry name>/log/condor_activity_<date>_<client-name>.log

Each Glidein also writes a couple of log files that get transferred back to the factory node after the glidein terminates. The log files are named:

- <glidein directory>/entry_<entry name>/log/job.<condor-g job nr>.out

- <glidein directory>/entry_<entry name>/log/job.<condor-g job nr>.err

The Glidein .out files are readable using any text editor, while the .err files

contain the compressed logs of the condor daemons.

Use the following commands to extract that information in simple text format

- glideinWMS/factory/tools/cat_MasterLog.py <err_fname>

- glideinWMS/factory/tools/cat_StartdLog.py <err_fname>

- glideinWMS/factory/tools/cat_StarterLog.py <err_fname>

Note: If you need Condor log files from a still running glidein, use the following

Condor command

- <condor dir>/sbin/condor_fetchlog -pool <pool collector> <glidein slot name> -startd MASTER|STARTD|STARTER

The Entry Daemons also summarize the information about completed glideins into

- <glidein directory>/entry_<entry name>/log/completed_jobs_<date>.log
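For scripts that need to locate the per-entry log files listed above, the naming patterns can be captured in a small helper. This is a sketch under assumptions: the function name is hypothetical, the sample directory and entry name are made up, and the date is passed through as an opaque string because its exact format is not specified here.

```python
import posixpath


def entry_log_paths(glidein_dir, entry_name, date):
    """Build the Entry Daemon log file paths following the patterns above."""
    log_dir = posixpath.join(glidein_dir, "entry_" + entry_name, "log")
    return {
        "info": posixpath.join(log_dir, "factory_info.%s.log" % date),
        "err": posixpath.join(log_dir, "factory_err.%s.log" % date),
        "completed": posixpath.join(log_dir, "completed_jobs_%s.log" % date),
    }


# Made-up directory, entry name and date string, for illustration only.
paths = entry_log_paths("/home/gfactory/glidein_g1", "test", "DATE")
```

The helper only formats names; it does not check that the files exist, which matters because logs are deleted after a week.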

Looking at ClassAds

As explained in the Data exchange

overview, the Entry Point Daemons expose a lot of monitoring

information in the ClassAds sent to the WMS collector. While this may

not be the most user friendly interface, most of the monitoring

information you'll ever need is present there.

On top of the Condor provided tools, the factory provides two tools to look at the ClassAds: the first one returns human readable but limited text, while the other provides complete XML-formatted output

- glideinWMS/tools/wmsTxtView.py

- glideinWMS/tools/wmsXMLView.py

Web monitoring

The Glidein Factory Daemon and the Entry Point Daemons also create XML documents on a Web accessible area (see <glidein><monitor base_dir> on the configuration page). These XML files contain the same information you would get by fetching the ClassAds, in addition to a short summary.

Historical Web monitoring

The Entry Point Daemons will also create RRD

databases and associated graphs for a period of up to one year.

This way, one can easily monitor the evolution of the system.

Scalability considerations

To be written. Will be expanded in future versions of the manual.

For now, look at the installation instructions.

Repository

CVSROOT

cvsuser@cdcvs.fnal.gov:/cvs/cd

Package(s)

glideinWMS/factory

glideinWMS/creation

Author(s)

Since Aug. 14th - Igor Sfiligoi (Fermilab Computing Division)