WMS Factory

WMS Pool and Factory Installation

1. Description

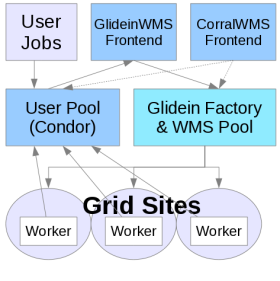

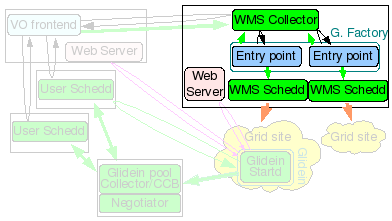

The glidein Factory node will be the HTCondor Central Manager for the WMS, i.e. it will run the HTCondor Collector and Negotiator daemons, but it will also act as a HTCondor Submit node for the glidein Factory, running HTCondor schedds used for Grid submission.

On top of that, this node also hosts the Glidein Factory daemons. The Glidein Factory is also responsible for the base configuration of the glideins (although part of the configuration comes from the Glidein Frontend).

Note: The WMS Pool collector and Factory must be installed on the same node.

Note: This document refers to the tarball distribution of the Glidein Factory. If you want to install, or have already installed, via RPM, please refer to the OSG RPM Guide.

2. Hardware requirements

| Installation Size | CPUs | Memory | Disk |

| Small | 1 | 1GB | ~10GB |

| Large | 4 - 8 | 2GB - 4GB | 100+GB |

A major installation, serving tens of sites and several thousand glideins, will require several CPUs (recommended 4-8: 1 for the HTCondor daemons, 1-2 for the glidein Factory daemons and 2 or more for HTCondor-G schedds) and a reasonable amount of memory (at least 2GB, 4GB for a large installation to provide some disk caching).

The disk is needed for binaries, config files, log files and Web monitoring data. For just a few sites, 10GB could be enough; larger installations will need 100+GB to maintain a reasonable history. Monitoring can be quite I/O intensive when serving many sites, so get the fastest disk you can afford, or consider setting up a RAMDISK.

The node must be on the public internet, with at least one port open to the world; all worker nodes will load data from this node through HTTP. Note that worker nodes will also need outbound access in order to reach this HTTP port.
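The reachability requirement above can be spot-checked from an off-site machine. The following is a minimal sketch and not part of GlideinWMS; the function name `port_is_open` is hypothetical, and it only tests that a TCP connection can be opened, not that the web server serves the right content.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```

For example, running `port_is_open("factory.example.org", 8080)` from a worker node (both the host name and the port are placeholders) returns `True` only if that node can reach the Factory's web port.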

3. Needed software

See the prerequisites page for a list of software requirements.

4. Before you begin...

4.1 Required Users

The installer requires several non-privileged users. These should be created prior to running the GlideinWMS installer.

| User | Notes |

| WMS Pool - HTCondor User | If privilege separation is not used, then install as the same user as the Factory |

| Factory | The Factory will always be installed as a non-privileged user, whether or not privilege separation is being used. |

| One user per Frontend (See notes) | If you are using privilege separation, you will need a user for each Frontend that will be communicating with the Factory. Otherwise, no new users need to be created for the Frontends. |

4.2 Required Certificates/Proxies

The installer will ask for several DNs for GSI authentication. You have the option of using a service certificate or a proxy. These should be created and put in place before running the installer. The following is a list of DNs the installer will ask for:

- WMS Pool cert/Proxy DN

- Glidein Frontend Proxy DN (cannot use a cert here)

Note 1: In some places the installer will also ask for nicknames to go with the DNs. These nicknames are the HTCondor UID used in its configuration and mapfile. The name given doesn't matter, EXCEPT if you are using privilege separation. Then on the WMS Pool the nickname for each Glidein Frontend must be the UNIX username that you created for that Frontend.

Note 2: The installer will ask if these are trusted HTCondor Daemons. Answer 'y'.

4.3 Required Directories

When installing the Factory you will need to specify the directory location for various items. Due to new restrictions on the directory permissions, it is no longer recommended that you install GlideinWMS into the /home directory of the user. We recommend putting these and most of the other directories in /var. All the directories in /var have to be created as root so you will have to create the directories ahead of time.

Note: The web data must be stored in a directory served by the HTTP Server.
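The directory guidance above can be checked before running the installer. This is a minimal sketch under stated assumptions: the helper names and all example paths are hypothetical, and the check is purely lexical (it does not resolve symlinks or `..` components).

```python
from pathlib import PurePosixPath


def is_inside(path: str, parent: str) -> bool:
    """Return True if path equals parent or lies somewhere beneath it."""
    p, base = PurePosixPath(path), PurePosixPath(parent)
    return p == base or base in p.parents


def check_layout(dirs: dict, web_dir: str, http_root: str) -> list:
    """Return a list of human-readable problems with the planned layout."""
    problems = []
    for name, path in dirs.items():
        # Installing under /home is no longer recommended.
        if is_inside(path, "/home"):
            problems.append(f"{name}: {path} is under /home")
    # The web data must live in a directory served by the HTTP server.
    if not is_inside(web_dir, http_root):
        problems.append(f"web data: {web_dir} is not served from {http_root}")
    return problems
```

An empty list means the planned layout passes both checks; each returned string names one directory that should be moved.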

4.4 Miscellaneous Notes

You will need the OSG Client (formerly VDT client). You need to install it yourself using either the RPM or the tarball provided by OSG (see here).

If you installed OSG Client using the RPM, the binaries will be in the system path. If you installed it using the tarball, then answer 'y' when asked if you want OSG_VDT_BASE defined globally; otherwise your users will have to find and hard-code the location themselves.

By default, match authentication will be used. If you have a reason not to use it, be sure to set the USE_MATCH_AUTH attribute to False in both the Factory and Frontend configuration files.

5. GlideinWMS Pool installation guide

If you are using privilege separation, you need to install the GlideinWMS Pool and schedds as root. Otherwise, they can be installed as a non-privileged user. The WMS Pool (its Collector) needs access to GSI credentials. To install the WMS Pool:

./manage-glideins --install wmscollector --ini glideinWMS.ini

| Attribute | Example | Description | Comments |

| install_type | tarball | Indicates this is a HTCondor tarball installation. At this time, for the WMS Collector, only tarball installations are supported. | Valid values: tarball |

| hostname | wmscollectornode.domain.name | hostname for WMS Pool. | The WMS Pool and Factory must be colocated (on the same host). |

| username | condor (or whatever non-root user you decide on) | UNIX user account that this service will run under. DO NOT use "root". | For security purposes, this value should always be a non-root user. However, if privilege separation is used (see the privilege_separation option), the manage-glideins script itself will need to be run as root (see above), since the HTCondor switchboard requires some files to be owned by root and some files to be owned by the non-superuser username for privilege separation to work correctly. |

| service_name | condor-wms | Used as the 'nickname' for the GSI DN in the condor_mapfile of other services. | . |

| condor_location | /path/to/condor-location | Directory in which the condor software will be installed. | IMPORTANT: The WMS Pool and Factory are always installed on the same node. The condor_location must not be a subdirectory of the Factory's install_location, logs_dir or client_log(proxy)_dir. They may share the same parent, however. |

| collector_port | 9618 (HTCondor default) | Defines the HTCondor Collector port for the WMS Pool. | Optional: default is 9618 (HTCondor default). If multiple glidein services are installed on the same node, this should be unique for each service. |

| privilege_separation | y | See the HTCondor Privilege Separation Documentation for more information | Valid values: * y - privilege separation is used * n - privilege separation is not used |

| frontend_users | frontend_service_name : unix_account | Maps the vofrontend's service name to the UNIX account that has been created for it. Only one Frontend service can be specified on install. | The format is: service_name : unix account. If privilege_separation is specified, this must specify the unique UNIX user account you set up for that Frontend service. If privilege_separation is not specified, this must be the Factory username. |

| x509_cert_dir | /path/to/certificates-location | The directory where the CA certificates are maintained. | The installer will check for the presence of *.0 and *.r0 files. If the CAs are installed from the VDT distribution, this will be the VDT_LOCATION/globus/TRUSTED_CA directory. |

| x509_cert | /path-to-cert-location/cert.pm | The location of the certificate file being used. | This file must be owned by the user installing (starting/stopping) this service. Permissions should be 644 or 600. |

| x509_key | /path-to-cert-location/key.pm | The location of the certificate key file being used and associated with the certificate defined by the x509_cert option above. | This file must be owned by the user installing (starting/stopping) this service. Permissions should be 600 or 400. |

| x509_gsi_dn | dn-subject-of-x509_cert-using-openssl | This is the identity of the certificate used by this service to contact the other HTCondor based GlideinWMS services. | This is the subject of the certificate (x509_cert option), as printed by: openssl x509 -subject -noout -in [x509_cert]. It is used to populate the condor_config file GSI_DAEMON_NAME and condor_mapfile entries of this and the other GlideinWMS services as needed. |

| condor_tarball | /path/to/condor/tarballs/condor-version.x-linux-x86-rhelX-dynamic.tar.gz | Location of the condor tarball. | The installation script will perform the installation of condor using this tarball. It must be a zipped tarball with a *.tar.gz name. |

| condor_admin_email | whomever@email.com | The email address to get HTCondor notifications in the event of a problem. | Used in the condor_config.local only. |

| number_of_schedds | 5 | The desired number of schedds to be used. | There must be at least 1 schedd. |

| install_vdt_client | n | This should be set to n so the installer will not attempt to install the OSG Client. You must pre-install the OSG Client. | Choose 'n'. |

| vdt_location | /path/to/glidein/vdt | The location of the OSG/VDT client software. | Leave this blank, since the install_vdt_client option should always be 'n' |

| glideinwms_location | /path/to/glideinWMS | Directory of the GlideinWMS software. | Since this is a HTCondor service only, this software is only used during the installation process. |

For example configuration files, see here.
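Several of the constraints in the table above can be checked mechanically before running the installer. The following is a minimal sketch, not the installer's own validation: the section name `WMSCollector`, the function name and the sample file are assumptions made for illustration, while the attribute names and rules come from the table.

```python
import configparser

# Hypothetical excerpt of a glideinWMS.ini; the section name is an assumption.
SAMPLE_INI = """
[WMSCollector]
hostname = wmscollectornode.domain.name
username = condor
privilege_separation = y
frontend_users = frontend_service_name : unix_account
number_of_schedds = 5
install_vdt_client = n
"""


def check_wms_pool(ini_text: str, section: str = "WMSCollector") -> list:
    """Return a list of problems found in the WMS Pool settings."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    cfg = parser[section]
    problems = []
    if cfg.get("username", "") == "root":
        problems.append("username must not be root")
    if cfg.get("privilege_separation", "") not in ("y", "n"):
        problems.append("privilege_separation must be y or n")
    if cfg.getint("number_of_schedds", fallback=0) < 1:
        problems.append("number_of_schedds must be at least 1")
    if cfg.get("install_vdt_client", "n") != "n":
        problems.append("install_vdt_client should be n")
    # frontend_users has the form "service_name : unix account".
    service, sep, account = cfg.get("frontend_users", "").partition(":")
    if not sep or not service.strip() or not account.strip():
        problems.append("frontend_users must look like 'service_name : unix_account'")
    return problems
```

Calling `check_wms_pool(SAMPLE_INI)` returns an empty list for the sample; changing `username` to `root` or `number_of_schedds` to `0` yields one message per violated rule.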

If you are using privilege separation, please check the content of the PrivSep configuration file (/etc/condor/privsep_config) and make sure that the callers and targets are colon-separated lists that include, respectively, all the users (and default groups) the Factory runs under and the ones representing the Frontends. This should match the security_classes section in the Factory configuration (glideinWMS.xml).

The installer allows you to automatically start the HTCondor daemons. To start them on your own, source the condor env script and execute:

/path/to/condor/location/condor start

To stop the HTCondor daemons, source the condor env script and execute:

/path/to/condor/location/condor stop

6. Glidein Factory installation guide

The Glidein Factory requires a proxy from the Frontend to use for submitting glideins. At any point in time, this proxy must have a remaining validity of at least the length of the longest expected job being run by the GlideinWMS, and not less than 12 hours. How you keep this proxy valid (via MyProxy, kx509, voms-proxy-init from a local certificate, scp from other nodes, or other methods) is beyond the scope of this document.
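The proxy validity rule above amounts to a simple comparison. A minimal sketch follows; the function names are hypothetical, and how the remaining lifetime is obtained (for example from your proxy tooling) is left to the caller.

```python
MIN_PROXY_SECONDS = 12 * 3600  # the 12-hour floor stated above


def required_proxy_seconds(longest_job_seconds: int) -> int:
    """Minimum remaining validity: the longest job, but never under 12 hours."""
    return max(longest_job_seconds, MIN_PROXY_SECONDS)


def proxy_is_sufficient(time_left_seconds: int, longest_job_seconds: int) -> bool:
    """Return True if the proxy's remaining lifetime satisfies the rule."""
    return time_left_seconds >= required_proxy_seconds(longest_job_seconds)
```

With one-hour jobs the 12-hour floor dominates, so a proxy needs at least 43200 seconds left; with 48-hour jobs it needs at least 172800 seconds.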

The Glidein Factory itself should be installed as a non-privileged user. The installer will create the configuration file used to configure and run the Factory, although some manual tuning will probably be needed.

The manage-glideins script should be run as the Factory user when installing the WMS Factory (see the username option below).

./manage-glideins --install factory --ini glideinWMS.ini

| Attribute | Example | Description | Comments |

| install_type | tarball | Indicates this is a HTCondor tarball installation. At this time, for the Factory, only tarball installations are supported. | Valid values: tarball. |

| hostname | wmscollector.domain.name | hostname for Factory. | The WMS Pool and Factory must be colocated. |

| username | factory user(non-root account) | UNIX user account that this service will run under. DO NOT use "root". | For security purposes, this value should always be a non-root user. Although the WMS Pool and Factory must be colocated, they can be run as independent users. |

| service_name | factory-wms | Used as the 'nickname' for the GSI DN in the condor_mapfile of other services. | . |

| install_location | /path/to/glidein/factory | HOME directory for the Factory software. | When the Factory is created the following files/directories will exist in this directory: * factory.sh - environment script * glidein_[instance_name].cfg - the Factory configuration file * glidein_[instance_name] - directory containing the Factory files. The install script will create this directory if it does not exist. |

| logs_dir | /path/to/factory/logs | User settable location for all Factory log files. | Beneath this location there will be multiple sets of logs: * for the Factory as a whole * for each entry point the Factory utilizes. The install script will create this directory if it does not exist. |

| client_log_dir client_proxy_dir | /path/to/client/log_location /path/to/client/proxy_location | User settable location for all client (VOFrontend) log and proxy files. | If privilege separation is used: * the entire path (inclusive of this directory) must be root-writable-only (0755 and owned by root) * these directories cannot be sub-directories of the Factory's install_location or logs_dir. If privilege separation is not used: * the directory can be independent, or nested as a subdirectory, of the Factory's install_location or logs_dir. If the above requirements are satisfied, the install script will create the necessary directories. If not, a permissions error will likely result. |

| instance_name | v2_5 | Used in naming files and directories. | . |

| use_glexec | y | Used to specify how user submitted jobs (not glidein pilots) are authorized on the worker nodes for an entry point. | With gLexec, the individual user's proxy submitted with their job is used to authorize the job and is reflected in the accounting. Without gLexec, the glidein pilot job's proxy is used and only that user account is reflected in the accounting. Valid values: * y - downloads and uses gLexec * n - glidein pilot proxy is used |

| use_ccb | n | Indicates if CCB should be used or not. | Valid values: * y - uses CCB * n - does not use CCB |

| ress_host | osg-ress-4.fnal.gov | Identifies the ReSS server to be used to select entry points (CEs) to submit glidein pilot jobs to. | The only validation performed is to verify if that server exists. Valid OSG values: * osg-ress-1.fnal.gov - OSG Production * osg-ress-4.fnal.gov - OSG ITB |

| entry_vos | cms, dzero | A comma delimited set of VOs used to select the entry points that glideins can be submitted to. | These are used as the initial criteria in querying ReSS for glidein entry points. |

| entry_filters | (int(GlueCEPolicyMaxCPUTime) <(25*60)) | An additional entry point (CE) filter for ensuring that specific resources are available. | After the initial set of entry points has been selected using the entry_vos criteria, these filters are applied. The format is a python expression using Glue schema attributes. |

| install_vdt_client | n | This should be set to n so the installer will not attempt to install the OSG Client. You must pre-install the OSG Client. | Choose 'n'. |

| vdt_location | /path/to/glidein/vdt | The location of the OSG/VDT client software. | Leave this blank, since the install_vdt_client option should always be 'n' |

| glideinwms_location | /path/to/glideinWMS | Directory of the GlideinWMS software. | This software is used for both the installation and during the actual running of this glidein service. |

| web_location | /var/www/html/factory | Specifies the location for the monitoring and staging data that must be accessible by web services. The installer will create the following directories in this location: 1. web_location/monitor 2. web_location/stage | Important: This should be created before installing this service as the service's username and the web server user are generally different. This script will not be able to create this directory with proper ownership. |

| web_url | http://%(hostname)s:port | Identifies the url used by the glidein pilots to download necessary software and to record monitoring data. In order to ensure consistency, the installer will take the unix basename of the web_location and append it to the web_url value. So, for the web_location value shown above, the actual value used by the glidein pilots will be web_url/factory/stage(monitor). | Important: It may be a good idea to verify that the port specified is accessible from off-site as some sites restrict off-site access to some ports. |

| javascriptrrd_location | /path/to/javascriptrrd | Identifies the location of the javascript rrd software. | This installation must include the flot processes in the parent directory. |

For example configuration files, see here.
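The way web_url and web_location combine, as described in the table above, can be sketched as follows. This is an illustration rather than the installer's code: the section name `Factory`, the function name and the port 8080 are assumptions, and the `%(hostname)s` placeholder is expanded here with Python's standard `configparser` interpolation.

```python
import configparser
import posixpath

# Hypothetical excerpt of a glideinWMS.ini; section name and port are assumptions.
SAMPLE_INI = """
[Factory]
hostname = wmscollector.domain.name
web_location = /var/www/html/factory
web_url = http://%(hostname)s:8080
"""


def glidein_urls(ini_text: str, section: str = "Factory") -> dict:
    """Return the stage and monitor URLs that glidein pilots would use."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    cfg = parser[section]
    # Basic interpolation expands %(hostname)s from the same section.
    base = cfg["web_url"].rstrip("/")
    # The unix basename of web_location is appended to web_url.
    leaf = posixpath.basename(cfg["web_location"].rstrip("/"))
    root = f"{base}/{leaf}"
    return {"stage": f"{root}/stage", "monitor": f"{root}/monitor"}
```

For the sample above, the stage URL comes out as `http://wmscollector.domain.name:8080/factory/stage`, which is the address that must be reachable from the worker nodes.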

If you followed the example above, you ended up with a configuration file in /path/to/glidein/working/directory/glidein_<instance_name>.cfg/glideinWMS.xml. This will contain a subset of values that will be in the final configuration file.

The installer will allow you to automatically "create" the glidein. This means it will create all the directories and files in the working directory that the Factory processes will use. These will be located in /path/to/glidein/working/directory/glidein_<instance_name>/*.

If you choose not to do this in the installer, you can create them on your own by sourcing the factory.sh file and then running:

cd glideinWMS/creation

./create_glidein <config file>

Warning: Never change the files in an entry point by hand after it has been created! Use the reconfig tools described below instead.

At this point, your Factory installation is complete and you can start the Factory. See below for the commands for running a Factory.

7. Factory Management Commands

Note: If you installed the Factory via RPM, some files or commands will differ. Please refer to the OSG RPM Guide.

7.1 Starting and Stopping

The Glidein Factory comes with an init.d style startup script.

Glidein Factory starting and stopping is handled by

<glidein directory>/factory_startup start|stop|restart

You can check that the Factory is actually running with

<glidein directory>/factory_startup status

To get the list of entries defined in the Factory, use

<glidein directory>/factory_startup info -entries -CE

To see which entries are currently active, use

<glidein directory>/factory_startup statusdown entries

To see the status of the overall Factory, use

<glidein directory>/factory_startup statusdown factory

See the monitoring section for more information and tools to monitor status.

7.2 Changing the Factory configuration

The files in an entry point must never be changed by hand after the directory structure has been created. Manual changes are problematic for two reasons:

- The first problem is signatures. Any change to a file requires a change to the signature file, which in turn gets a new signature. Since the signature is one of the parameters of the startup script, all glideins already in the queue will fail.

- The second problem is caching. For performance reasons, most Web caches don't check too often if the original document has been changed; a glidein could thus get an old copy of a file and fail the signature check.

There is only one file that is neither signed nor cached and can be thus modified; the blacklisting file called nodes.blacklist. This one can be used to temporarily blacklist malfunctioning nodes that would pass regular sanity checks (for example: memory corruption or I/O errors), while waiting for the Grid site admin to take action.
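The signature and caching problems described above can be illustrated with a content hash. This is a conceptual sketch only: the use of SHA-1, the function names and the sample file contents are assumptions for illustration and are not taken from the GlideinWMS implementation.

```python
import hashlib


def digest(content: bytes) -> str:
    """Hex digest of a file's content (the choice of SHA-1 is an assumption)."""
    return hashlib.sha1(content).hexdigest()


def passes_check(downloaded: bytes, expected_digest: str) -> bool:
    """Glidein-side check: does the downloaded file match its signature?"""
    return digest(downloaded) == expected_digest


# Hypothetical file contents before and after a manual edit.
original = b"setting example\n"
edited = b"setting example-edited\n"

# A glidein already in the queue carries the signature of the original file.
signature_at_submit_time = digest(original)
```

A queued glidein that downloads the edited file fails `passes_check(edited, signature_at_submit_time)`, and a new glidein that receives a stale cached copy fails `passes_check(original, digest(edited))`; both cases correspond to the failures listed above.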

The proper procedure to update an entry point is to make a copy of the official configuration file (i.e. glideinWMS.xml) as a backup. Then edit the config file and run

<glidein working directory>/factory_startup reconfig config_copy_fname

This will update the directory tree and restart the Factory and entry daemons. (If the Factory wasn't running at the time of reconfig, it will only update the directory tree.)

Please note that if you make any errors in the new configuration file, the reconfig script will throw an error and do nothing. If you executed the reconfig command while the Factory was running, it will revert to the last config file and restart with those settings. As long as you use this tool, you should never corrupt the installation tree.

The factory_startup script contains a default location for the Factory configuration and is set to the location used for the initial install. This allows you to not have to specify the config location when doing a reconfig. To change the default location in the file, run the command:

<glidein working directory>/factory_startup reconfig config_copy_fname update_default_cfg

NOTE: The reconfig tool does not kill the Factory in case of errors. It is also recommended that you only disable entry points that will no longer be used; never remove them from the config file.
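The backup-then-reconfig procedure described in this section can be scripted. The following is a minimal sketch under stated assumptions: the helper names and the backup naming scheme are hypothetical, and the command is only assembled here, not executed.

```python
import shutil
import time
from pathlib import Path


def backup_config(config_path: str) -> Path:
    """Copy the configuration file next to itself with a timestamp suffix."""
    src = Path(config_path)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dst = src.with_name(f"{src.name}.{stamp}.bak")
    shutil.copy2(src, dst)  # copy2 also preserves modification times
    return dst


def reconfig_command(factory_dir: str, edited_config: str) -> list:
    """Build the factory_startup reconfig command for the edited file."""
    return [str(Path(factory_dir) / "factory_startup"), "reconfig", edited_config]
```

A typical sequence would be to call `backup_config` on glideinWMS.xml, edit the file, and then pass the list from `reconfig_command` to `subprocess.run` while logged in as the Factory user.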

7.4 Downtime handling

The glidein Factory supports the dynamic handling of downtimes at the Factory, entry, and security class level.

Downtimes are useful when one or more Grid sites are known to have issues (anything from scheduled maintenance to a storage element corrupting user files). In this case the Factory administrator can temporarily stop submitting glideins to the affected sites, without stopping the Factory as a whole. Current downtimes are listed in the Factory file glideinWMS.downtimes.

Downtimes are handled with

<glidein directory>/factory_startup up|down -entry 'factory'|<entry name> [-delay <delay>]

Caution: An admin can handle downtimes at the Factory, entry, and security class levels. Please be aware that all of them will be used.

More advanced configuration can be done with the following script:

glideinWMS/factory/manageFactoryDowntimes.py -dir factory_dir -entry ['all'|'factory'|'entries'|entry_name] -cmd [command] [options]

You must specify the above options for the Factory directory, the entry you wish to disable/enable, and the command to run. The valid commands are:

- add - Add a scheduled downtime period

- down - Put the Factory down now(+delay)

- up - Get the Factory back up now(+delay)

- check - Report if the Factory is in downtime now(+delay)

- vacuum - Remove all expired downtime info

Additional options that can be given based on the command above are:

- -start [[[YYYY-]MM-]DD-]HH:MM[:SS] (start time for adding a downtime)

- -end [[[YYYY-]MM-]DD-]HH:MM[:SS] (end time for adding a downtime)

- -delay [HHh][MMm][SS[s]] (delay a downtime for down, up, and check cmds)

- -security SECURITY_CLASS (restricts a downtime to users of that security class; if not specified, the downtime is for all users)

- -comment "Comment here" (user comment for the downtime; not used by WMS)

This script gives you more control over managing downtimes: you can make downtimes specific to security classes and add comments to the downtimes file.

Please note that the date format is currently very specific. You need to specify dates in the format "YYYY-MM-DD-HH:MM:SS", such as "2011-11-28-23:01:00".
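Because the formats are strict, it can help to validate values before passing them to the downtime tools. The sketch below is not taken from manageFactoryDowntimes.py; the function names are hypothetical, and it only accepts the full YYYY-MM-DD-HH:MM:SS timestamp form and the [HHh][MMm][SS[s]] delay form listed above.

```python
import re
from datetime import datetime

# Optional hours, minutes and seconds, in that order; the trailing "s" is optional.
_DELAY_RE = re.compile(r"^(?:(\d+)h)?(?:(\d+)m)?(?:(\d+)s?)?$")


def parse_delay(text: str) -> int:
    """Convert a [HHh][MMm][SS[s]] delay such as '1h30m' into seconds."""
    match = _DELAY_RE.match(text)
    if not text or match is None:
        raise ValueError(f"not a valid delay: {text!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def parse_downtime(text: str) -> datetime:
    """Parse a full YYYY-MM-DD-HH:MM:SS timestamp, rejecting anything else."""
    return datetime.strptime(text, "%Y-%m-%d-%H:%M:%S")
```

Both functions raise `ValueError` on malformed input, so a wrapper script can stop before it touches the downtimes file.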

7.5 Testing with a local glidein

In case of problems, you may want to test a glidein by hand.

Move to the glidein directory and run

./local_start.sh entry_name fast -- GLIDEIN_Collector yourhost.dot,your.dot,domain

This will start a glidein on the local machine, pointing to the yourhost.your.domain collector.

Please make sure you have a valid Grid environment set up, including a valid proxy, as the glidein needs it in order to work.
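In the command above, each dot of the collector host name is written as `.dot,`. A small helper can apply that substitution, sketched here under the assumption that only dots need encoding; the function names are hypothetical, and any other special characters are outside the scope of this sketch.

```python
def encode_value(value: str) -> str:
    """Write each '.' as '.dot,' as in the local_start.sh example above."""
    return value.replace(".", ".dot,")


def decode_value(encoded: str) -> str:
    """Reverse the substitution to recover the plain host name."""
    return encoded.replace(".dot,", ".")
```

For instance, `encode_value("yourhost.your.domain")` produces the `yourhost.dot,your.dot,domain` form used on the command line.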

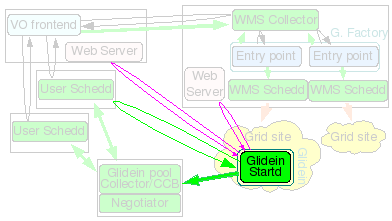

8. Verification

Verify that HTCondor processes are running by:

ps -ef | grep condor

You should see several condor_master and condor_procd processes. You should also be able to see one schedd process for each secondary schedd you specified in the install.

Verify GlideinWMS processes are running by:

ps -ef | grep factory_username

You should also see a main Factory process as well as a process for each entry.

You can query the WMS collector by (use .csh if using c shell):

$ source /path/to/condor/location/condor.sh

$ condor_q

$ condor_q -global

$ condor_status -any

The condor_q command queries any jobs by schedd in the WMS pool (-global is needed to show grid jobs). The condor_status command will show all daemons and glidein classads in the condor pool. Eventually, there will be glidefactory classads for each entry, glideclient classads for each client and credential, and glidefactoryclient classads for each entry-client relationship. The glideclient and glidefactoryclient classads will not show up unless a Frontend is able to communicate with the WMS Collector.

MyType TargetType Name

glidefactory None FNAL_FERMIGRID_ITB@v1_0@mySite

glidefactoryclient None FNAL_FERMIGRID_ITB@v1_0@mySite

glideclient None FNAL_FERMIGRID_ITB@v1_0@mySite

Scheduler None xxxx.fnal.gov

DaemonMaster None xxxx.fnal.gov

Negotiator None xxxx.fnal.gov

Scheduler None schedd_glideins1@xxxx.fna

DaemonMaster None schedd_glideins1@xxxx.fna

Scheduler None schedd_glideins2@xxxx.fna

DaemonMaster None schedd_glideins2@xxxx.fna
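A listing like the one above can be summarized by classad type to confirm that a Frontend has connected. This is a minimal sketch that assumes the three-column, whitespace-separated layout shown; the function names are hypothetical, and the sample is a shortened copy of the listing.

```python
from collections import Counter

SAMPLE = """\
MyType TargetType Name
glidefactory None FNAL_FERMIGRID_ITB@v1_0@mySite
glidefactoryclient None FNAL_FERMIGRID_ITB@v1_0@mySite
glideclient None FNAL_FERMIGRID_ITB@v1_0@mySite
Scheduler None xxxx.fnal.gov
DaemonMaster None xxxx.fnal.gov
Negotiator None xxxx.fnal.gov
Scheduler None schedd_glideins1@xxxx.fna
"""


def count_by_type(status_output: str) -> Counter:
    """Count classads per MyType, skipping the header and blank lines."""
    counts = Counter()
    for line in status_output.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0] == "MyType":
            continue
        counts[fields[0]] += 1
    return counts


def frontend_connected(counts: Counter) -> bool:
    """Both client classad types appear only once a Frontend is talking to us."""
    return counts["glideclient"] > 0 and counts["glidefactoryclient"] > 0
```

If `frontend_connected` returns `False` while glidefactory classads are present, the Factory is advertising its entries but no Frontend has reached the WMS Collector yet.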