- Example Configuration

- Frontend Configuration

- Singularity attributes

- Customizing the glidein Startup

- Using multiple proxies

- Using multiple WMS collectors

- Using multiple user pool collectors/HTCondor High Availability (HA) support

- Using Singularity

- Starting the Daemon

- XSLT Plugins to extend configuration

Example Configuration

Below is an example Frontend configuration XML file.

<frontend>
   <log_retention>
      <process_logs>
         <process_log extension="info" max_days="7.0" max_mbytes="100.0" min_days="3.0" msg_types="INFO" backup_count="5" compression="gz" />
         <process_log extension="debug" max_days="7.0" max_mbytes="100.0" min_days="3.0" msg_types="DEBUG,ERR,WARN" backup_count="5" />
      </process_logs>
   </log_retention>
   <match match_expr="True" start_expr="True" policy_file="/path/to/python-policy-file">
      <factory query_expr="True">
         <match_attrs />
         <collectors>
            <collector DN="/DC=org/DC=doegrids/OU=Services/CN=factory-server.fnal.gov" comment="" factory_identity="factoryuser@factory-server.fnal.gov" my_identity="frontenduser@frontend-server.fnal.gov" node="factory-server.fnal.gov:8618" />
         </collectors>
      </factory>
      <job comment="" query_expr="(JobUniverse==5)&amp;&amp;(GLIDEIN_Is_Monitor =!= TRUE)&amp;&amp;(JOB_Is_Monitor =!= TRUE)">
         <match_attrs />
         <schedds>
            <schedd DN="/DC=org/DC=doegrids/OU=Services/CN=userpool.fnal.gov" fullname="userpool.fnal.gov" />
            <schedd DN="/DC=org/DC=doegrids/OU=Services/CN=userpool.fnal.gov" fullname="schedd_jobs1@userpool.fnal.gov" />
            <schedd DN="/DC=org/DC=doegrids/OU=Services/CN=userpool.fnal.gov" fullname="schedd_jobs2@userpool.fnal.gov" />
         </schedds>
      </job>
   </match>
   <monitor base_dir="/var/www/html/vofrontend/monitor" flot_dir="/opt/javascriptrrd-0.6.3/flot" javascriptRRD_dir="/opt/javascriptrrd-0.6.3/src/lib" jquery_dir="/opt/javascriptrrd-0.6.3/flot" />
   <monitor_footer display_txt="Legal Disclaimer" href_link="/site/disclaimer.html" />
   <security classad_proxy="/etc/grid-security/vocert.pem" proxy_DN="/DC=org/DC=doegrids/OU=Services/CN=frontend-server.fnal.gov" proxy_selection_plugin="ProxyAll" security_name="frontenduser" sym_key="aes_256_cbc">
      <credentials>
         <credential absfname="/tmp/x509up_u" security_class="frontend" trust_domain="OSG" type="grid_proxy" vm_id="123" vm_type="type1" pool_idx_len="5" pool_idx_list="2,4-6,10" />
      </credentials>
   </security>
   <stage base_dir="/var/www/html/vofrontend/stage" use_symlink="True" web_base_url="http://frontend-server.fnal.gov:9000/vofrontend/stage" />
   <work base_dir="/opt/vofrontend" base_log_dir="/opt/vofrontend/logs" />
   <attrs>
      <attr name="GLIDECLIENT_Rank" glidein_publish="False" job_publish="False" parameter="True" type="string" value="1" />
      <attr name="GLIDECLIENT_Start" glidein_publish="False" job_publish="False" parameter="True" type="string" value="True" />
      <attr name="GLIDEIN_Expose_Grid_Env" glidein_publish="True" job_publish="True" parameter="False" type="string" value="True" />
      <attr name="GLIDEIN_Glexec_Use" glidein_publish="True" job_publish="True" parameter="False" type="string" value="OPTIONAL" />
      <attr name="USE_MATCH_AUTH" glidein_publish="False" job_publish="False" parameter="True" type="string" value="True" />
   </attrs>
   <groups>
      <group name="main" enabled="True">
         <config ignore_down_entries="True">
            <idle_glideins_per_entry max="100" reserve="5" />
            <idle_vms_per_entry curb="20" max="100" />
            <idle_vms_total curb="500" max="1000" />
            <running_glideins_per_entry max="2000" relative_to_queue="1.15" min="0" />
            <running_glideins_total curb="30000" max="40000" />
            <glideins_removal margin="0" requests_tracking="False" type="ALL" wait="0" />
         </config>
         <match match_expr="True" start_expr="True" policy_file="/path/to/python-policy-file">
            <factory query_expr="True">
               <match_attrs />
               <collectors />
            </factory>
            <job query_expr="True">
               <match_attrs />
               <schedds />
            </job>
         </match>
         <security>
            <credentials />
         </security>
         <attrs />
         <files />
      </group>
   </groups>
   <files>
      <file absfname="/opt/script/testSW.sh" after_entry="True" after_group="False" const="True" executable="True" untar="False" wrapper="False" />
      <file absfname="/opt/script/testP.sh" after_entry="True" after_group="False" const="True" executable="True" period="1800" untar="False" wrapper="False" />
   </files>
   <ccbs>
      <ccb DN="/DC=org/DC=doegrids/OU=Services/CN=ccb.fnal.gov" node="ccb.fnal.gov" />
      <ccb DN="/DC=org/DC=doegrids/OU=Services/CN=ccb2.fnal.gov" node="ccb2.fnal.gov:9620-9640" group="group2" />
   </ccbs>
   <collectors>
      <collector DN="/DC=org/DC=doegrids/OU=Services/CN=usercollector.fnal.gov" node="usercollector.fnal.gov" secondary="False" group="default" />
      <collector DN="/DC=org/DC=doegrids/OU=Services/CN=usercollector.fnal.gov" node="usercollector.fnal.gov:9620-9819" secondary="True" group="default" />
      <collector DN="/DC=org/DC=doegrids/OU=Services/CN=usercollector2.fnal.gov" node="usercollector2.fnal.gov" secondary="False" group="ha" />
      <collector DN="/DC=org/DC=doegrids/OU=Services/CN=usercollector2.fnal.gov" node="usercollector2.fnal.gov:9620-9919" secondary="True" group="ha" />
   </collectors>
   <config>
      <idle_vms_total curb="800" max="1200" />
      <idle_vms_total_global curb="1000" max="1500" />
      <running_glideins_total curb="35000" max="45000" />
      <running_glideins_total_global curb="50000" max="60000" />
   </config>
   <high_availability check_interval="300" enabled="False">
      <ha_frontends>
         <ha_frontend frontend_name="vofrontend-v2_4" />
      </ha_frontends>
   </high_availability>
</frontend>

The Glidein Frontend Configuration

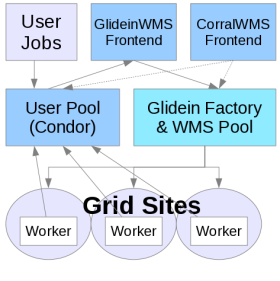

The Glidein Frontend configuration involves creating the configuration directory and files, and then creating the daemons. As in the Glidein Factory setup, a configuration tool converts an XML file into a configuration tree.

For the installer to create the Glidein Frontend instance from the configuration directory and grid mapfile, the following objects can be defined:

- <frontend frontend_name="your name" downtimes_file="a file name" advertise_delay="seconds" loop_delay="nr" advertise_with_tcp="True|False" advertise_with_multiple="True|False">The frontend_name combines the Frontend and instance names specified during installation. It is used to create the Glidein Frontend instance directory and files. The downtimes_file is the name of the internal file used by the downtime feature; any legitimate file name that indicates its purpose is sufficient. The delay parameters define how active the Glidein Frontend should be. Finally, advertise_with_tcp defines whether TCP should be used to advertise the ClassAds to the Factory. advertise_with_multiple enables the condor_advertise -multiple option, available in HTCondor 7.5.4 and later.

- <frontend><log_retention><process_logs><process_log max_days="max days" min_days="min days" max_bytes="max bytes" backup_count="backup count" type="ALL" compression="gz"/>

The admin can configure one or more logs with any combination of the following log message types:

- INFO: Informational messages about the state of the system.

- DEBUG: Debug messages. These are additional informational messages that describe code execution in detail.

- ERR: Error messages. These may include tracebacks.

- WARN: Warning messages. These warn of conditions that do not necessarily cause abnormal execution.

Log Retention and Rotation Policy:

Log files are rotated based on their age and size, as follows:

- If the log file size reaches max_bytes, the file is rotated (NOTE: the value is truncated, and 0 means no rotation).

- If the log file size is less than max_bytes but the file is older than max_days, the file is rotated.

- min_days is not used and is kept only for backwards compatibility.

- Rotated files may be compressed. Supported compressions are Gzip "gz" and Zip "zip". The default is no compression (empty string).

- After rotation, the backup_count most recent files are kept and older ones are deleted. backup_count defaults to 5.
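The rotation rules above can be sketched in Python as follows. This is an illustrative model, not the GlideinWMS logging implementation. The helper names `should_rotate` and `backups_to_delete` are hypothetical. The size limit is taken in megabytes, as in the `max_mbytes` attribute of the example configuration. A truncated value of 0 is assumed to disable only the size check.

```python
import time

SECONDS_PER_DAY = 24 * 60 * 60


def should_rotate(size_bytes, created_at, now, max_mbytes, max_days):
    """Decide whether a log file should be rotated (simplified sketch)."""
    # The size limit is truncated to an integer number of MB; here a value
    # of 0 is assumed to disable only the size-based check.
    limit_bytes = int(max_mbytes) * 1024 * 1024
    if limit_bytes > 0 and size_bytes >= limit_bytes:
        return True
    # Files below the size limit are rotated once older than max_days.
    age_days = (now - created_at) / SECONDS_PER_DAY
    return age_days > max_days


def backups_to_delete(backups, backup_count=5):
    """Return the rotated files to delete, keeping the newest backup_count.

    backups is a list of (file_name, modification_time) pairs.
    """
    newest_first = sorted(backups, key=lambda item: item[1], reverse=True)
    return [name for name, _ in newest_first[backup_count:]]


if __name__ == "__main__":
    now = time.time()
    # 150 MB, one day old, with max_mbytes="100.0": rotated because of size.
    print(should_rotate(150 * 1024 * 1024, now - SECONDS_PER_DAY, now, 100.0, 7.0))
    # 10 MB, eight days old, with max_days="7.0": rotated because of age.
    print(should_rotate(10 * 1024 * 1024, now - 8 * SECONDS_PER_DAY, now, 100.0, 7.0))
```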

- <frontend><match><factory><collectors><collector my_identity="joe@collector1.myorg.com" node="collector1.myorg.com" factory_identity="gfactory@gfactory1.my.org" DN="/DC=org/DC=doegrids/OU=Services/CN=collector/collectory1.my.org"/>This defines which WMS Collector the Glidein Frontend maps to, and the mapped name for the identity of the ClassAd. It also specifies the identity used by the Frontend itself (my_identity) and the expected identity of the Factory (factory_identity). These attributes must be configured correctly, or else the Factory will drop requests from the Frontend, citing security concerns.

To configure the WMS collector for multiple (secondary) ports, refer to the Advanced HTCondor Configuration Multiple Collectors document.

The configuration required for the Frontend to communicate with the secondary WMS collectors is shown below:

   <collector DN="/DC=org/DC=doegrids/OU=Services/CN=collector/collectory1.my.org" comment="Primary collector"
              factory_identity="gfactory@gfactory1.my.org" my_identity="joe@collector1.myorg.com"
              node="collector1.myorg.com:primary_port"/>
   <collector DN="/DC=org/DC=doegrids/OU=Services/CN=collector/collectory1.my.org"
              factory_identity="gfactory@gfactory1.my.org" my_identity="joe@collector1.myorg.com"
              node="collector1.myorg.com:secondary_port"/>

- <frontend><match><job query_expr="expression" >An HTCondor constraint used with condor_q when looking for user jobs (e.g. 'JobUniverse=?=5' to consider only Vanilla jobs). To consider all user jobs, set this to TRUE.

- <frontend><match><job><schedds><schedd fullname="schedd name" />When you provide the user pool collector to the installer, it finds all the available schedds. Here you can specify which schedds to monitor for user jobs. The schedd fullname is the name under which the schedd is registered with the user pool collector.

- <frontend><monitor base_dir="web_dir" flot_dir="web_dir" javascriptRRD_dir="web_dir" jquery_dir="web_dir" />The base_dir defines where the web monitoring pages are located. The other entries point to the locations of the javascriptRRD, Flot and JQuery libraries.

- <frontend><monitor_footer display_txt="Legal Disclaimer" href_link="/site/disclaimer.html" />OPTIONAL: If the display text and link are configured, the monitoring pages display the text/link at the bottom of the page.

- <frontend><security proxy_DN="/DC=org/DC=doegrids/OU=Service/CN=frontend/frontend1.my.org" classad_proxy="proxy_dir" proxy_selection_plugin="ProxyAll" security_name="vofrontend1">classad_proxy must contain the full path of the grid proxy to use. security_name is the name under which the Frontend is registered with the Factory.

- <frontend><security><credentials><credential type="grid_proxy" absfname="proxyfile" [keyabsfname="keyfile"] [pilotabsfname="proxyfile"] security_class="frontend" trust_domain="id" [creation_script="creationcommand"] [update_frequency="time_left"] [remote_username="username"] [vm_id="id"] [vm_type="type"] [pool_idx_len="5"] [pool_idx_list="2,4-6,10"] />This section lists credentials used by the whole Frontend. You can have multiple credentials listed here and they are used by all groups. The type (authentication method in the Factory's entry) can be one of the following:

- grid_proxy: An X.509 proxy stored in the absfname location.

- cert_pair: An X.509 certificate pair with the certificate in the absfname location and the certificate key in the keyabsfname location.

- key_pair: A key pair with the public key stored in the absfname location and the private key in the keyabsfname location.

- username_password: A username/password combination, with the username stored in a text file at the absfname location and the password stored in a text file at the keyabsfname location.

- auth_file: Auth file or token stored as text at the absfname location.

Pool index length and list allow the admin to configure a list of credentials without having to list each one of them. The file names must all be identical except for the numbers at the end. The index length pads each index with leading zeros to that length. For example, with absfname="path/foo" pool_idx_len="4" pool_idx_list="2,4-6", you should have the files foo0002, foo0004, foo0005 and foo0006 in path/.
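The expansion of pool_idx_len and pool_idx_list into file names can be sketched as below. The function names are hypothetical. The parsing rules (comma-separated items, inclusive "a-b" ranges, zero padding to pool_idx_len digits) are inferred from the example above rather than taken from the Frontend code.

```python
def expand_idx_list(pool_idx_list):
    """Expand an index list such as "2,4-6,10" into [2, 4, 5, 6, 10]."""
    indices = []
    for part in pool_idx_list.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            # A range "a-b" includes both ends.
            start, end = (int(x) for x in part.split("-", 1))
            indices.extend(range(start, end + 1))
        else:
            indices.append(int(part))
    return indices


def credential_files(absfname, pool_idx_len, pool_idx_list):
    """Build the credential file names: absfname + zero-padded index."""
    return [absfname + str(idx).zfill(pool_idx_len)
            for idx in expand_idx_list(pool_idx_list)]


print(credential_files("path/foo", 4, "2,4-6"))
```

With the values from the example above, this prints the four names path/foo0002, path/foo0004, path/foo0005 and path/foo0006.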

Security class determines what user the credential is associated with on the Factory side. The trust domain determines which entries this credential will be used on. The credential will only be submitted to sites that match this trust domain. Coordinate with your Factory operator in order to properly determine these values.

The attribute pilotabsfname is required for non-grid types. This specifies a proxy (distinct from the credentials used to actually submit) that is used for the pilot to connect back to the user pool. For instance, Amazon key pairs can be used to connect to cloud resources, but an actual x509 proxy will be needed for the glidein on the cloud to connect to the HTCondor user pool.

The attributes creation_script and update_frequency are optional attributes used to renew the credential. During the Frontend loop, the script creationcommand is invoked when time_left seconds or less remain on the credential.

The attribute remote_username is an optional attribute used with the key pair authentication method. If specified, it replaces the user name given in the Factory entry via the USERNAME@HOST:PORT string of the gatekeeper attribute. The username has to be specified in at least one of these two places.

- <frontend><stage base_dir="web_dir" web_base_url="URL" />The location of the web server directories. This is the staging area where the files needed to run your jobs (e.g. HTCondor) are located. You may need to change this according to your requirements.

- <frontend><work base_dir="directory" />This defines the path to the Glidein Frontend directory.

- <frontend><files><file absfname="filepath" relfname="filename" prefix="cron_prefix" executable="boolean" after_group="boolean" period="seconds(int)" />The file parameters are used to specify the location, the download/execution order and attributes of additional files. The system (VO Frontend) is already transferring some files, e.g. grid mapfile. This can be used to add custom scripts to the VO Frontend (either for all glideins for this frontend or only for a group). See below or the page dedicated to writing custom scripts for more information. A list of all the possible attributes and their values is in the custom_code section of the Factory configuration document.

- <frontend><attrs><attr name="attr_name" value="value" parameter="True" type="string" glidein_publish="boolean" job_publish="boolean" >The following three attributes are required to be set in the Frontend requests and/or published for jobs. Others can be specified as well.

- <attr name="GLIDEIN_Collector" >This contains the name of the pool collector, e.g. mymachine.mydomain.

- <attr name="GLIDEIN_Expose_Grid_Env" >This determines if you want to expose the user to the grid environment.

- <attr name="USE_MATCH_AUTH" >This determines whether or not you want to use match authentication. You specify the match expression in a group section of the config file.

- <attr name="GLIDEIN_Glexec_Use" >This determines whether or not you want to mandate the use of GLEXEC. Possible values are NEVER (do not use GLEXEC), OPTIONAL (use GLEXEC if the site is configured with it) or REQUIRED (always use GLEXEC). Mandating the use of GLEXEC also forces the Factory to submit glideins only to sites that have GLEXEC configured.

- <attr name="GLIDEIN_Singularity_Use" >This determines whether or not you want to mandate the use of Singularity. It affects both provisioning and the job on the site. Possible values are:

- DISABLE_GWMS (disable the GWMS Singularity machinery so as not to interfere with independent VO setups; this is the default)

- NEVER (do not use Singularity)

- OPTIONAL or PREFERRED (use Singularity if the site is configured with it)

- REQUIRED (always require Singularity)

See the section on using Singularity below for how to specify the wrapper, set the available images and select one. See the custom variables file for reference information on all the variables and on the format of the dictionary.

SINGULARITY_IMAGE_DEFAULT6, SINGULARITY_IMAGE_DEFAULT7 and SINGULARITY_IMAGE_DEFAULT are deprecated. These will be ignored in future versions. Currently these set the values of the 'rhel6', 'rhel7' and 'default' images respectively in SINGULARITY_IMAGES_DICT.

If you want to use your own wrapper script for singularity, you must first understand /var/lib/gwms-frontend/web-base/default_singularity_wrapper.sh and make your custom script compatible with this setup script.

The Frontend or a group can specify bind-mounts for Singularity images using GLIDEIN_SINGULARITY_BINDPATH or custom options. Again, you can find more in the Factory configuration document and the custom variables file.

Users can specify one or more of the following 3 lines in their job submit file:

- If their jobs need a list of CVMFS repositories, they must specify the list between double quotes, separated by commas, for example:

+CVMFSReposList = "cms.cern.ch,atlas.cern.ch"

If any of these CVMFS repositories is not available, the user jobs will fail.

- If they want their own Singularity image, they put the image file in /cvmfs/singularity.opensciencegrid.org/ and use:

+SingularityImage = "/cvmfs/singularity.opensciencegrid.org/PATH_NAME_TO_THE_IMAGE"

If users use this feature and the Singularity image is not at the indicated path in the /cvmfs repository, the user job will error out.

- If the users do not specify their own Singularity image, the default Singularity image will be used. SINGULARITY_IMAGES_DICT will be searched for 'default', 'rhel7' and 'rhel6' images.

Users can select a specific image from SINGULARITY_IMAGES_DICT by using REQUIRED_OS:

+REQUIRED_OS = "rhel6"

If the users specify a value different from 'any' for REQUIRED_OS (e.g. rhel6, rhel7), the corresponding version of default Singularity image (the one with matching name in the dictionary) will be used. Independently from how an image was selected, if the chosen Singularity image is not available, the user job will fail. If the users specify REQUIRED_OS as 'any', the first available default Singularity image will be used and the user job will fail only if there is no default Singularity image.
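The image selection rules described above can be summarized in a small Python sketch. It is not the code used by the GlideinWMS Singularity setup scripts. `select_singularity_image` is a hypothetical helper. SINGULARITY_IMAGES_DICT is modeled as a plain Python dict rather than in its real string format, and availability checks are delegated to a caller-supplied function. A job without REQUIRED_OS is assumed to behave like 'any'.

```python
DEFAULT_SEARCH_ORDER = ("default", "rhel7", "rhel6")


def select_singularity_image(job_ad, images_dict, is_available):
    """Pick the Singularity image for a job (simplified sketch).

    job_ad: dict with optional "SingularityImage" and "REQUIRED_OS" keys.
    images_dict: mapping such as {"rhel7": "/cvmfs/...", "default": "..."}.
    is_available: callable returning True if an image path can be used.
    Raises LookupError where the text says the user job would fail.
    """
    # 1. An image requested explicitly by the user always wins.
    if "SingularityImage" in job_ad:
        image = job_ad["SingularityImage"]
        if not is_available(image):
            raise LookupError("requested image not available: %s" % image)
        return image

    # Assumption: a missing REQUIRED_OS behaves like "any".
    required_os = job_ad.get("REQUIRED_OS", "any")

    # 2. A specific OS selects the dictionary entry with the same name.
    if required_os != "any":
        image = images_dict.get(required_os)
        if image is None or not is_available(image):
            raise LookupError("no usable image for REQUIRED_OS=%s" % required_os)
        return image

    # 3. Otherwise use the first available default image.
    for key in DEFAULT_SEARCH_ORDER:
        image = images_dict.get(key)
        if image is not None and is_available(image):
            return image
    raise LookupError("no default Singularity image available")


images = {"rhel6": "/img/el6", "rhel7": "/img/el7"}
print(select_singularity_image({"REQUIRED_OS": "rhel6"}, images, lambda p: True))
```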


- <attr name="GLIDECLIENT_Rank" >This is a condor expression that allows the ranking and prioritization of jobs. This attribute is propagated to condor_vars.lst.

- <attr name="GLIDECLIENT_Start" >This is a condor expression that determines whether a glidein job will start on a resource. It is used for resource management by HTCondor.

- <attr name="GLIDEIN_MaxMemMBs_Estimate" >If set to TRUE, the glidein estimates the memory that the HTCondor daemon can use, based on memory/core or memory/CPU. Estimation only happens if GLIDEIN_MaxMemMBs is not configured by the Factory for the entry.

Other attributes can be specified as well. They are used by the VO Frontend matchmaking and job matchmaking. The format is similar to the attributes on the Factory config file. The table below describes the <attrs ... > tag in more detail.

Refer to the Custom HTCondor Variables section for the list of available variables.

| Attribute Name | Attribute Description |
|---|---|
| name | Name of the attribute |
| value | Value of the attribute |
| parameter | Set to True if the attribute should be passed as a parameter. If set to False, the attribute will be put in the staging area to be accessed by the glidein startup scripts. Always set this to True unless you know what you are doing. See Note below for more details |
| glidein_publish | If set to True, the attribute will be available in the condor_startd's ClassAd. Used only if parameter is True. |
| job_publish | If set to True, the attribute will be available in the user job's environment. Used only if parameter is True. |
| comment | You can specify a description of the attribute here. |
| type | Type of the attribute. Supported types are 'int', 'string' and 'expr'. Type expr is equivalent to condor constant/expression in condor_vars.lst |

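An example attribute, taken from the example configuration above, would be:

<attr name="USE_MATCH_AUTH" glidein_publish="False" job_publish="False" parameter="True" type="string" value="True" />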

Note: The attribute option "publish" is currently not available for the Frontend. It is available only in the Factory configuration, as indicated in its attributes section. However, you can set parameter="True" to pass Frontend-controlled values and make them available in the ClassAd and in the glidein submission on the Factory. These attributes are distinguished in the glidein submit environment by the prefix "GLIDEIN_PARAM_".

The following group parameters are used to configure multiple Frontends. If only one group is specified, they apply to all Frontends. The objects specified are used for creating and monitoring glideins. Groups are used to group users with similar requirements, such as proxies, criteria for matching job requirements with sites, and configuration of glideins.

- <frontend><groups><group name="name" enabled="boolean" >This specifies the name of the group and whether it is enabled.

- <frontend><groups><group><config ignore_down_entries="boolean" >

The attribute ignore_down_entries determines the behavior of frontend groups when one of the factory entries is in downtime, i.e., the GLIDEIN_In_Downtime attribute is set for the entry. If ignore_down_entries is False or missing entirely, the entry is considered for accounting purposes. For example, suppose there are 100 user jobs and two entries, A and B, with A in downtime. The frontend will then request 50 idle jobs for B, and 50 will go in the downtime category. If ignore_down_entries is True instead, A is ignored and the frontend requests 100 jobs for B. This attribute can be set both in the global section and here in the group section. Group values override global ones. If it is set in neither place, the default value is False.
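The arithmetic of the ignore_down_entries example can be reproduced with the simplified Python model below. It assumes that every idle job can run on every entry and that jobs are split evenly. The real Frontend matching is more involved, and `idle_requests` is a hypothetical name.

```python
def idle_requests(idle_jobs, entries, ignore_down_entries=False):
    """Split idle jobs evenly across Factory entries (simplified sketch).

    entries maps each entry name to True when the entry is in downtime.
    Returns (requests for entries that are up, jobs counted as in downtime).
    """
    # Down entries still take a share unless ignore_down_entries is True.
    counted = [name for name, down in entries.items()
               if not (ignore_down_entries and down)]
    if not counted:
        return {}, 0
    share = idle_jobs // len(counted)  # remainders are ignored in this sketch
    requests = {name: share for name in counted if not entries[name]}
    in_downtime = share * sum(1 for name in counted if entries[name])
    return requests, in_downtime


print(idle_requests(100, {"A": True, "B": False}))
print(idle_requests(100, {"A": True, "B": False}, ignore_down_entries=True))
```

The two calls correspond to the 50/50 and 100 cases described in the text.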

The elements listed here are parameters for a group section that regulate how aggressive this group should be in trying to provision glideins when it sees idle user jobs.

- The first element, idle_glideins_per_entry, defines the upper limit on how aggressive the provisioning requests should be. The number specified in max is the highest number of idle/pending glideins that the resource associated with any Factory entry will ever see.

- The next element, idle_glideins_lifetime, determines how many seconds glideins stay in the idle state in the Factory queue before they are automatically removed (through a periodic_remove expression).

- The next two throttle the requests if the provisioned glideins (counted as number of slots) are not matched to the user jobs, due to either a policy mismatch or system overload. idle_vms_per_entry throttles on a per Factory entry basis (e.g. Grid site), while idle_vms_total throttles based on the states of all the glideins provisioned by this group. The Frontend stops requesting more glideins if/when max is reached. If/when curb is reached/exceeded, the number of glideins requested in each provisioning request is cut in half.

- The next two elements put an upper bound on how many glideins the Frontend will try to provision. Like the previous two, running_glideins_per_entry throttles the provisioning requests on a per Factory entry basis, while running_glideins_total throttles based on the number of all the glideins provisioned by this group. The min attribute of running_glideins_per_entry is the minimum number of glideins kept running for each entry associated with the group (even if there are no idle jobs). Keep in mind that if you use this min attribute and also set idle_glideins_lifetime, old idle glideins might be removed and immediately resubmitted.

- The glideins_removal element controls the removal of glideins when they are not used. Unused glideins eventually expire, and the Frontend also has an automatic glidein removal mechanism. Still, you can trigger an early removal or stop all removals.

  Early removal is controlled by 4 attributes (type, requests_tracking, margin, wait) and is disabled by default (type="NO"). type selects what to remove:
  - NO (default): no early removal.
  - IDLE: only Idle glideins (not submitted).
  - WAIT: also glideins waiting in the remote queues.
  - ALL: also running glideins (all glideins except the ones already staging output can be removed).
  - DISABLE: also disables the automatic removal mechanism of the Frontend (Glidein expiration is still in place).

  When requests_tracking is True and the current need for Glideins drops margin below the available Glideins, the Glideins in excess are removed. If requests_tracking is False (default), the Frontend removes glideins when there are no pending job requests for this group. Either way, type still controls what to remove (and the default NO means no early removal). wait adds a delay: the Frontend waits for N cycles without requests (or below the margin) before triggering the removal.
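The early-removal rules of glideins_removal can be modeled as a small state machine, sketched below in Python. This is an interpretation of the description above, not the Frontend's code. The class name, the state labels, reading margin as "need more than margin below available", and the removal count (available minus needed) are all assumptions.

```python
# Glidein states a removal type may touch (labels are illustrative).
REMOVABLE_STATES = {
    "NO": frozenset(),
    "DISABLE": frozenset(),  # also disables the Frontend's automatic removal
    "IDLE": frozenset({"idle"}),
    "WAIT": frozenset({"idle", "waiting"}),
    "ALL": frozenset({"idle", "waiting", "running"}),
}


class RemovalPolicy:
    """Simplified model of the glideins_removal early-removal rules."""

    def __init__(self, removal_type="NO", requests_tracking=False, margin=0, wait=0):
        self.removal_type = removal_type
        self.requests_tracking = requests_tracking
        self.margin = margin
        self.wait = wait
        self._triggered_cycles = 0  # consecutive cycles meeting the condition

    def _condition(self, needed, available):
        if self.requests_tracking:
            # Need has dropped more than `margin` below the available glideins.
            return available - needed > self.margin
        # Without tracking, act only when the group has no pending requests.
        return needed == 0

    def cycle(self, needed, available):
        """Evaluate one Frontend cycle.

        Returns (states eligible for removal, number of glideins to remove).
        """
        states = REMOVABLE_STATES[self.removal_type]
        if not states or not self._condition(needed, available):
            self._triggered_cycles = 0
            return frozenset(), 0
        self._triggered_cycles += 1
        if self._triggered_cycles <= self.wait:
            return frozenset(), 0  # still waiting `wait` cycles
        return states, available - needed


policy = RemovalPolicy("IDLE", wait=1)
print(policy.cycle(needed=0, available=4))  # first cycle: waiting
print(policy.cycle(needed=0, available=4))  # second cycle: removal triggered
```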


- <frontend><groups><group><match match_expr="expr" start_expr="expr" policy_file="/path/to/python-policy-file">The match_expr is a Python boolean expression used to match glideins to jobs. The glidein and job dictionaries are used in the expression, e.g. glidein["attrs"].get("GLIDEIN_Site") in ((job.get("DESIRED_Sites") != None) and job.get("DESIRED_Sites").split(",")). If you want to match all, just specify True. All HTCondor attributes used in this expression should be listed below in the match_attrs section.

The start_expr is an HTCondor expression that is evaluated to match glideins to jobs. It should be a valid HTCondor ClassAd expression, e.g. (stringListMember(GLIDEIN_Site,DESIRED_Sites,",")=?=True).
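The sketch below shows how a Python match_expr and a ClassAd start_expr relate. It evaluates a match expression against job and glidein dictionaries and provides a rough Python analogue of stringListMember. The names `evaluate_match`, `string_list_member` and `MATCH_EXPR` are hypothetical; this is not the Frontend's evaluation code. Unlike the example in the text, this expression also handles jobs without DESIRED_Sites, where `x in False` would raise a TypeError. Only evaluate expressions that come from trusted configuration.

```python
def string_list_member(item, string_list, delimiter=","):
    """Rough Python analogue of the ClassAd stringListMember() function."""
    if item is None or string_list is None:
        return False
    return item in [s.strip() for s in string_list.split(delimiter)]


# Hypothetical match_expr equivalent to the start_expr shown above.
MATCH_EXPR = ('glidein["attrs"].get("GLIDEIN_Site") in '
              '(job.get("DESIRED_Sites") or "").split(",")')


def evaluate_match(match_expr, job, glidein):
    """Evaluate a match_expr string with only `job` and `glidein` in scope."""
    scope = {"job": job, "glidein": glidein}
    return bool(eval(match_expr, {"__builtins__": {}}, scope))


job = {"DESIRED_Sites": "Site_A,Site_B"}
glidein = {"attrs": {"GLIDEIN_Site": "Site_B"}}
print(evaluate_match(MATCH_EXPR, job, glidein))
print(string_list_member(glidein["attrs"]["GLIDEIN_Site"], job["DESIRED_Sites"]))
```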

policy_file lets you optionally configure the Frontend or group policy, including factory_query_expr, factory_match_attrs, job_query_expr, job_match_attrs and the match expression, without having to enter similar information in frontend.xml. When policy information is specified both in frontend.xml and in a policy file, information from both sources is considered:

- match_expr from frontend.xml is ANDed with match(job, glidein) from the policy file

- job/factory query_expr from frontend.xml is ANDed with the respective job/factory query_expr from the policy file

- the lists of job/factory match_attrs are created by merging the lists from frontend.xml and the policy file
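The combination rules for frontend.xml and the policy file can be sketched as below. The helper names are hypothetical, and match_attrs are modeled as name-to-type dictionaries. Letting the policy file win on conflicting attribute names is an assumption. The same ANDing also applies to a global and a group factory query_expr.

```python
def and_exprs(*exprs):
    """AND together non-empty ClassAd expressions, parenthesizing each one."""
    parts = [e.strip() for e in exprs if e and e.strip()]
    if not parts:
        return "True"
    return " && ".join("(%s)" % p for p in parts)


def merge_match_attrs(xml_attrs, policy_attrs):
    """Merge two match_attrs mappings (attribute name -> type)."""
    merged = dict(xml_attrs)
    merged.update(policy_attrs)  # assumption: the policy file wins on conflicts
    return merged


def combined_match(xml_match_expr, policy_match):
    """Return match(job, glidein) = XML match_expr AND policy match()."""
    def match(job, glidein):
        scope = {"job": job, "glidein": glidein}
        xml_ok = eval(xml_match_expr, {"__builtins__": {}}, scope)
        return bool(xml_ok) and bool(policy_match(job, glidein))
    return match


# Global and group factory constraints combine the same way (EXPR1 && EXPR2).
print(and_exprs('stringListMember("CMS",GLIDEIN_Supported_VOs)',
                "GLIDEIN_Job_Max_Time=!=UNDEFINED"))
```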

More on match expressions

- <frontend><groups><group><match><factory query_expr="expr" >This is an HTCondor ClassAd expression used to select Factory entry points. HTCondor evaluates the expression, so it must be a valid HTCondor expression.

One example to select from entries that list CMS as a supported VO could be query_expr='stringListMember("CMS",GLIDEIN_Supported_VOs) && (GLIDEIN_Job_Max_Time=!=UNDEFINED)'

See the HTCondor manual for more on valid expressions and functions.

Note that you can specify a Factory constraint both in the global default section and in the group Factory tag (such as <factory query_expr='EXPR1'>... <group> <factory query_expr='EXPR2'> </group>). In that case the Frontend will "AND" the expressions to create a combination (such as EXPR1 && EXPR2).

- <frontend><groups><group><match><factory><match_attrs><match_attr name="name" >A list of glidein Factory attributes used in the Factory match expression. Each match attribute should be an HTCondor ClassAd attribute that is listed in the above match_expr. More on match expressions

- <frontend><groups><group><match><job query_expr="expr" >This is an HTCondor ClassAd expression used to select the user jobs for which glideins are provisioned. HTCondor evaluates it, so it must be a valid HTCondor expression. For instance, query_expr="(JobUniverse==5)" selects only vanilla universe jobs.

More on match expressions

- <frontend><groups><group><match><job><match_attrs><match_attr name="name" >A list of user job attributes used in the match expression. Each match attribute should be an HTCondor ClassAd attribute that is listed in the above match_expr. More on match expressions

- <frontend><groups><group><security><credentials><credential type="grid_proxy" absfname="proxyfile" [keyabsfname="keyfile"] [pilotabsfname="proxyfile"] security_class="classname" trust_domain="id" [vm_id="id"] [vm_type="type"] />Group-specific credentials can be defined that will be passed to the Factory in addition to any globally defined credentials.

- <frontend><groups><group> <attrs><attr name="attr_name" value="value" parameter="True" type="string" glidein_publish="boolean" job_publish="boolean" >Each group can have its own set of attributes, with the same semantics as the attrs section in the global area.

If an attribute is defined twice with the same name, the group one prevails.

- <frontend><config ignore_down_entries="boolean">

The attribute ignore_down_entries in this element determines the default behavior of frontend groups when one of the factory entries is in downtime, i.e., the GLIDEIN_In_Downtime attribute is set for the entry. If ignore_down_entries is False or missing entirely, the entry is considered for accounting purposes. This means that if there are 100 user jobs, and two entries A and B, and A is in downtime, then by default frontend groups will request 50 idle jobs for B (and 50 will go in the downtime category). If ignore_down_entries is True instead, then A will be ignored and the frontend will request 100 jobs for B. This attribute can be set either here in the global section or in group sections. Group values override global ones. The default value in this section is False.

The elements listed here are parameters for this section that regulate the global throttling of the provisioning requests.

- The first two elements throttle requests if the glideins (and startds in general, counted as number of slots) are not matched to the user jobs, due to either a policy mismatch or system overload. idle_vms_total throttles based on the glideins provisioned by this Frontend, while idle_vms_total_global throttles based on all the startds visible to the Frontend. The Frontend stops requesting more glideins if/when max is reached. If/when curb is reached/exceeded, the number of glideins requested in each provisioning request is cut in half.

- The last two elements put an upper bound on how many glideins the Frontend will try to provision. Like the previous two, running_glideins_total throttles the provisioning requests based on the number of glideins provisioned by this Frontend, while running_glideins_total_global throttles based on the number of all the startds visible to the Frontend.
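The curb/max rule shared by these throttling elements is sketched below for a single limit. It is a simplified model with a hypothetical `throttle_request` helper. How the Frontend combines several limits (per entry, per group, global) is not shown.

```python
def throttle_request(requested, current, curb, max_):
    """Apply one curb/max limit to a provisioning request (simplified sketch).

    requested: glideins the Frontend would like to ask for in this cycle.
    current:   the quantity being limited (e.g. idle slots or running glideins).
    """
    if current >= max_:
        return 0  # max reached: stop requesting more glideins
    if current >= curb:
        return requested // 2  # curb reached/exceeded: cut the request in half
    return requested


# Using idle_vms_total curb="800" max="1200" from the example configuration.
for idle_slots in (500, 900, 1200):
    print(idle_slots, throttle_request(100, idle_slots, curb=800, max_=1200))
```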


- <frontend><collectors><collector DN="certificate DN" node="User Pool Collector" secondary="True/False" group="Group Name"/>List of user pool collector(s) where condor_startd started by glideins should report to as an available resource. This is where the user jobs will be matched with glidein resources.

For scalability purposes, multiple (secondary) user pool collectors can be used. Refer to the Advanced HTCondor Configuration Multiple Collectors document for details for the HTCondor configuration.

The configuration required for the Frontend to communicate with the secondary user pool collectors is shown below:

   <collectors>
      <collector DN="certificate DN" secondary="False" node="User Pool Collector:primary_port"/>
      <collector DN="certificate DN" secondary="True" node="User Pool Collector:secondary_port"/>
   </collectors>

If the secondary port numbers were assigned as a contiguous range, only one additional collector element is required, specifying the port range (e.g. 9640-9645). Otherwise you need a collector element for each secondary port.

For redundancy purposes, the user pool can be configured using the HTCondor High Availability feature. Refer to the Advanced HTCondor Configuration Multiple Collectors document for details on the HTCondor configuration.

This requires listing all the HTCondor collectors, primary as well as secondary, grouped by group name. HTCondor daemons started by the glideins will report back to one of the secondary collectors in each group, chosen at random. If a group has no secondary collector, the primary collector is selected for that group. Here is an example with different configurations:

<collectors>
<collector DN="certificate DN" secondary="False" node="User Pool Collector 1:primary_port" group="default"/>
<collector DN="certificate DN" secondary="True" node="User Pool Collector 1:secondary_port_range" group="default"/>
<collector DN="certificate DN" secondary="False" node="User Pool Collector 2" group="1"/>
<collector DN="certificate DN" secondary="True" node="User Pool Collector 2:secondary_port?sock=collectorID1-ID2" group="1"/>
</collectors>
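The per-group selection rule described above can be sketched as follows. This is an illustration under stated assumptions, not the glidein's actual logic: the collectors are represented as hypothetical dicts, a missing group is assumed to mean "default", and any port or sock ranges are assumed to be already expanded into individual addresses.

```python
import random
from collections import defaultdict

def pick_collectors(collectors, rng=random):
    """Choose the collector a glidein daemon reports to, one per group.

    collectors: list of dicts with 'node', 'secondary' (bool) and an
    optional 'group' (hypothetical representation of <collector> elements).
    """
    groups = defaultdict(lambda: {"primary": [], "secondary": []})
    for c in collectors:
        kind = "secondary" if c["secondary"] else "primary"
        groups[c.get("group", "default")][kind].append(c["node"])
    chosen = {}
    for name, members in groups.items():
        # Prefer a random secondary collector; fall back to the primary
        # when the group has no secondary collector.
        pool = members["secondary"] or members["primary"]
        chosen[name] = rng.choice(pool)
    return chosen
```

With the example above, group "default" would get one of the secondary ports of User Pool Collector 1, while a group listing only a primary collector would always get that primary.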

Important: For the secondary collectors there are multiple configuration options: a port number or a port range, or, for the shared_port option, a sinful string with a single collector (sock=collectorX) or a sock range (sock=collectorID1-ID2). These last two cases are further explained in the Advanced HTCondor Configuration Multiple Collectors document.

It's very important to follow this configuration pattern. No comma or semicolon is allowed in the node specification.

If the secondary port numbers (or the numeric part at the end of the sock name) were assigned as a contiguous range, only one additional collector line is required, specifying the port range (e.g. 9640-9645) or sock range (e.g. collector1-16); otherwise you will need a collector element for each secondary port or sock value.

- <frontend><ccbs><ccb DN="certificate DN" node="CCB (Collector)" group="Group name" />

List of HTCondor Connection Brokering, or CCB, servers. These are collector daemons that allow communications with HTCondor daemons behind a firewall. See the HTCondor manual for more information on CCB. A switch in the entry section of the Factory configuration determines whether the daemons started by Glideins on that entry will use CCBs or not.

Normally this section is empty and the main User Pool Collector acts also as CCB if needed. Only very specific setups need to change this section.

For scalability purposes, multiple CCBs can be listed. For availability purposes, divide them into multiple groups. Each Glidein will use only one CCB per group: it will receive a randomly shuffled list of CCBs, with one entry chosen by HTCondor at random from each distinct group. When you configure a list of CCB servers to use, each daemon will register with all of the given CCB servers and include all of them in its sinful string (the information given to others for how to contact it). When a client tool or other daemon wishes to contact that daemon, it will try the CCB servers in random order until it gets a successful connection. For more details, see the HTCondor manual. Daemons on the same host using a shared port daemon will share the same CCBs.

NOTE that there is no correlation between these groups and the collector groups and HTCondor has no way to set any affinity (i.e. for this collector use this CCB close by). When no group is specified "default" is assumed.

The configuration required for the Frontend and Glideins to communicate using CCB collectors is shown below:

<ccbs>
<ccb DN="certificate DN" node="CCB Collector:port"/>
<ccb DN="certificate DN" node="CCB Collector:port_range"/>
<ccb DN="certificate DN" node="CCB Collector:other_port?sock=collectorID1-ID2"/>
</ccbs>

Important: As in the User Pool Collector configuration, there are multiple configuration options: a single host name, a port range, or a sinful string (shared_port configuration) with a single collector ID (sock=collectorX) or with a "sock range" (sock=collectorID1-ID2). For shared_port, the configuration is further explained in the Advanced HTCondor Configuration Multiple Collectors document. It's very important to follow this configuration pattern. No comma or semicolon is allowed in the node specification.
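The CCB list a Glidein receives (one CCB chosen at random from each group, then shuffled) can be sketched like this. The dict representation and the function name `ccb_list_for_glidein` are hypothetical; the sketch only mirrors the rule stated above, including the "default" group for entries without a group attribute.

```python
import random
from collections import defaultdict

def ccb_list_for_glidein(ccbs, rng=random):
    """Return the CCB addresses one glidein would use: one per group, shuffled.

    ccbs: list of dicts with a 'node' and an optional 'group' key
    (hypothetical representation of the <ccb> elements).
    """
    by_group = defaultdict(list)
    for ccb in ccbs:
        # Entries without a group attribute belong to the "default" group.
        by_group[ccb.get("group", "default")].append(ccb["node"])
    # One CCB picked at random from each distinct group...
    chosen = [rng.choice(nodes) for nodes in by_group.values()]
    # ...and the resulting list handed out in random order.
    rng.shuffle(chosen)
    return chosen
```

Because groups carry no affinity to collector groups, splitting CCBs into more groups increases availability (more CCBs per daemon) at the cost of more CCB registrations.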

- <frontend><high_availability check_interval="Check interval in sec" enabled="True/False"/>

This setting allows you to run the Frontend in HA mode. Currently only 1 master and 1 slave are supported. By default, the Frontend runs in master mode, i.e. enabled="False".

To configure the Frontend as a slave, set enabled="True". The slave will check the WMS Collector for the ClassAds from the master Frontend every check_interval seconds. The frontend_name setting in the example below is the name of the master Frontend, i.e. 'FrontendName' as it appears in the glideclient ClassAd of the master Frontend, or the value of the frontend_name configuration of the master Frontend. When the slave detects that the master has stopped advertising, it will take over and start advertising the requests. You can use the same frontend_name for both the master and the slave Frontend to make it transparent to other services; however, you can still distinguish which Frontend is currently active by looking at 'FrontendHAMode' in the glideclient ClassAd.

<high_availability check_interval="300" enabled="True">
<ha_frontends>
<ha_frontend frontend_name="vofrontend-v2_4"/>
</ha_frontends>
</high_availability>
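The slave's behavior can be sketched as a polling loop. This is a simplified illustration, not the Frontend's HA implementation: `master_is_advertising` and `take_over` are hypothetical callbacks (a real slave would query the WMS Collector for the master's glideclient ClassAds), and the sketch does not model what happens if the master comes back.

```python
import time

def run_ha_slave(master_is_advertising, take_over, check_interval=300,
                 sleep=time.sleep, max_checks=None):
    """Poll every check_interval seconds; take over once the master's ads vanish.

    master_is_advertising, take_over: hypothetical callbacks standing in for
    the WMS Collector query and for starting to advertise requests.
    Returns the number of checks performed, or None if max_checks ran out.
    """
    checks = 0
    while max_checks is None or checks < max_checks:
        checks += 1
        if not master_is_advertising():
            take_over()  # master stopped advertising: slave becomes active
            return checks
        sleep(check_interval)
    return None
```

Injecting `sleep` keeps the loop testable without waiting check_interval seconds between polls.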

Adding Custom Code/Scripts to Glidein Frontend Glideins

You can add custom scripts to glideins created for this Glidein Frontend by adding scripts and files to the configuration in the files section:

[<groups><group>]
<files>
<file absfname="script name" executable="True" after_group="True" comment="comment"/>
</files>
[</group></groups>]

The script/file will be copied to the Web-accessible area and added to one of the glidein's file lists. When a glidein starts, the glidein startup script will pull it, validate it and perform any action requested (execute, untar, just keep it, ...). after_entry and after_group can be used to affect the execution order (see writing custom scripts). If any parameters are needed, they can be specified using <attr />.

For more detailed information, see the page dedicated to writing custom scripts.

You can also create wrapper scripts or tarballs of files; see the Factory configuration page for the syntax (use groups/group tags instead of the Factory's entry tag). The variable name specified by absdir_outattr will be prefixed with either GLIDECLIENT_ or GLIDECLIENT_GROUP_, depending on scope.
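The naming rule for absdir_outattr can be illustrated with a tiny helper. The mapping used here is an assumption inferred from the prefix names: a file declared in the global (frontend-level) files section gets GLIDECLIENT_, and one declared inside a group gets GLIDECLIENT_GROUP_. The helper itself is hypothetical.

```python
def absdir_outattr_name(name, scope):
    """Name under which an absdir_outattr value is exposed in the glidein.

    Assumed mapping (hypothetical helper): 'frontend' scope -> GLIDECLIENT_,
    'group' scope -> GLIDECLIENT_GROUP_.
    """
    prefixes = {"frontend": "GLIDECLIENT_", "group": "GLIDECLIENT_GROUP_"}
    return prefixes[scope] + name
```

Custom scripts that read the unpacked directory should therefore look for the prefixed name, not the bare absdir_outattr value.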

Match Expressions and Match Attributes

Several sections in the configuration allow a match expression. Each of these sections allows an expression to be evaluated to determine where glideins and jobs should be matched. For example, expressions allowing a white list by the Frontend can be created in order to control where the glideins are submitted. They can also let you give an HTCondor expression to specify where jobs can run or to specify which glidein_sites can run jobs.

There are two ways to restrict matching in most cases. Note that match_expr clauses, such as <match match_expr>, use Python-based expressions as explained below. Others, such as <factory query_expr> and <job query_expr>, use HTCondor ClassAd expressions. For these, only valid HTCondor expressions can be used; Python expressions cannot be evaluated in these contexts.

Note that, for some tags (like factory query_expr), you can specify expressions in both the default global section and in individual group sections. You should take special care before doing this to make sure the expressions are correct, as the expressions are typically "AND"-ed together.
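The effect of AND-ing a global and a group query_expr can be sketched as plain string composition. The helper `combine_query_exprs` is hypothetical and only illustrates why a restrictive global expression narrows every group: each part is parenthesized so operator precedence inside one scope cannot leak into the other, and trivially true parts are dropped.

```python
def combine_query_exprs(*exprs):
    """AND together ClassAd query expressions from several scopes.

    Hypothetical illustration: e.g. the global <factory query_expr> and a
    group's <factory query_expr>. Empty and literal "True" parts are skipped.
    """
    parts = [e.strip() for e in exprs if e and e.strip() != "True"]
    if not parts:
        return "True"
    return " && ".join(f"({e})" for e in parts)
```

For example, combining a global `GLIDEIN_Site=!=UNDEFINED` with a group `GLIDEIN_Site=="FNAL"` yields an expression that only matches FNAL entries, even if another group expects more.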

Each match expression is a python expression that will be evaluated. Matches can be scoped to either global scope (<frontend><match>) or to a group specific scope.

Each Python expression will typically be a series of boolean tests, surrounded by parentheses and connected by the boolean operators "and", "or", and "not". You can use several dictionaries in these match expressions. The "job" dictionary contains the ClassAd of the job being matched, and the "glidein" dictionary contains information about the Factory (entry point) ClassAd. There is also an "attr_dict" dictionary that can reference attributes defined in the frontend.xml. While an extensive list of everything you can do in these expressions is out of scope, some examples are below:

- ("ImageSize" in job): Returns true if the job ClassAd has the attribute "ImageSize".

- (job["NumJobStarts"]>5): Returns true if the job ClassAd attribute "NumJobStarts" is greater than 5.

- ("GLIDEIN_Retire_Time" in glidein["attrs"]): Returns true if the Factory entry ClassAd has the attribute "GLIDEIN_Retire_Time".

- (glidein["attrs"]["GLIDEIN_Retire_Time"]>21600): Returns true if the Factory entry ClassAd's "GLIDEIN_Retire_Time" is greater than 21600.

- (attr_dict["NUM_USERS_ALLOWED"]>0): Returns true if there is an attribute NUM_USERS_ALLOWED in the frontend.xml that is greater than zero.

- (job["Owner"] in attr_dict["ITB_Users"].split(",")): Returns true if the job ClassAd attribute "Owner" is in the comma-delimited string attribute ITB_Users (which would be defined in frontend.xml).
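To try such expressions outside the Frontend, they can be evaluated against hand-built dictionaries. This sketch only mirrors the three documented names (job, glidein, attr_dict); `evaluate_match_expr` and the sample values are hypothetical, and the Frontend's own evaluation machinery is different. Builtins are removed from the eval scope to keep the test environment minimal, but eval should still only be used on trusted configuration text.

```python
def evaluate_match_expr(expr, job, glidein, attr_dict):
    """Evaluate a match_expr string for one job/glidein pair (sketch only)."""
    scope = {"job": job, "glidein": glidein, "attr_dict": attr_dict}
    return bool(eval(expr, {"__builtins__": {}}, scope))

# Made-up sample data shaped like the dictionaries described above.
job = {"Owner": "alice", "NumJobStarts": 7, "ImageSize": 1000}
glidein = {"attrs": {"GLIDEIN_Retire_Time": 36000}}
attr_dict = {"ITB_Users": "alice,bob", "NUM_USERS_ALLOWED": 2}

expr = ('(job["Owner"] in attr_dict["ITB_Users"].split(",")) and '
        '(glidein["attrs"]["GLIDEIN_Retire_Time"] > 21600)')
print(evaluate_match_expr(expr, job, glidein, attr_dict))
```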

Each attribute used in a match expression should be declared in a subsequent match_attrs section. This makes ClassAd variables available to the match expression. Attributes can be made available from the:

- Factory ClassAd: (<match><factory><match_attr>)

- Job ClassAd: (<match><job><match_attr>)

Each match_attr tag must contain a name, which is the name of the attribute in the appropriate ClassAd. It must also contain a type, which can be one of the following:

- string: A constant string of letters, numbers, or characters.

- int: An integer: a positive or negative number, or zero.

- real: A real number that could have decimal places

- bool: It can be "True" or "False"

- Expr: A ClassAd expression
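As a rough illustration of what each type name means, the sketch below converts a raw attribute value according to its declared type. The function `convert_match_attr` is hypothetical and is not how GlideinWMS handles match_attr internally; Expr values are simply kept as unevaluated ClassAd expression text.

```python
def convert_match_attr(value, attr_type):
    """Convert a raw attribute value per its match_attr type (hypothetical)."""
    if attr_type == "string":
        return str(value)
    if attr_type == "int":
        return int(value)
    if attr_type == "real":
        return float(value)
    if attr_type == "bool":
        if value in (True, "True"):
            return True
        if value in (False, "False"):
            return False
        raise ValueError(f"not a bool: {value!r}")
    if attr_type == "Expr":
        # A ClassAd expression is kept as text, not evaluated here.
        return str(value)
    raise ValueError(f"unknown match_attr type: {attr_type!r}")
```

Declaring the right type matters because a match expression such as `job["NumJobStarts"]>5` compares numbers only if the value is exposed as an int or real rather than a string.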

Example

<match match_expr='glidein["attrs"].get("GLIDEIN_Site") in job["DESIRED_Sites"].split(",")'>
<factory query_expr="(GLIDEIN_Site=!=UNDEFINED)">
<match_attrs> <match_attr name="GLIDEIN_Site" type="string"/> </match_attrs>
<collectors> </collectors>
</factory>
<job query_expr="(DESIRED_Sites=!=UNDEFINED)">
<match_attrs> <match_attr name="DESIRED_Sites" type="string"/> </match_attrs>
<schedds> </schedds>
</job>
</match>

Example

Glideins can also use "start_expr" to make sure the correct jobs start on pilots. This is an HTCondor expression evaluated by the startd daemon on the pilot. Here is an example:...

</match >

Example

Here is an example of a policy.py that is equivalent to the above example:

<match policy_file="/path/to/policy.py">
<factory query_expr="True"> <match_attrs /> </factory>
<job query_expr="True"> <match_attrs /> </job>
</match>

# Filename: /path/to/policy.py

# match(job, glidein) is equivalent to match_expr in frontend.xml
def match(job, glidein):
    return (glidein['attrs'].get('GLIDEIN_Site') in job["DESIRED_Sites"].split(","))

# factory/job query_expr is a string containing an HTCondor expression
factory_query_expr = '(GLIDEIN_Site=!=UNDEFINED)'
job_query_expr = '(DESIRED_Sites=!=UNDEFINED)'

# factory/job match_attrs is a dict with the following structure
factory_match_attrs = {
    'GLIDEIN_Site': {'type': 'string', 'comment': 'From policy'}
}

job_match_attrs = {
    'DESIRED_Sites': {'type': 'string', 'comment': 'From policy'}
}
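Before pointing policy_file at a new policy, one option is to exercise its match() function directly with hand-built dictionaries. The sketch below copies match() from the policy.py example; the job and glidein values are made up, and the dictionary shapes (job["DESIRED_Sites"], glidein["attrs"]) follow the example rather than a full Frontend ClassAd.

```python
# Copy of match() from the policy.py example, exercised with sample data.
def match(job, glidein):
    return (glidein['attrs'].get('GLIDEIN_Site') in job["DESIRED_Sites"].split(","))

job = {"DESIRED_Sites": "FNAL,UCSD"}
print(match(job, {"attrs": {"GLIDEIN_Site": "UCSD"}}))      # True
print(match(job, {"attrs": {"GLIDEIN_Site": "Nebraska"}}))  # False
print(match(job, {"attrs": {}}))                            # False: no site advertised
```

Two details show up quickly this way: a job without DESIRED_Sites would raise a KeyError, which is why job_query_expr filters on DESIRED_Sites=!=UNDEFINED, and a value such as "FNAL, UCSD" (with a space) would not match "UCSD" because split(",") keeps the leading space.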

Using Multiple Proxies

Why would you want to use a pool of pilot proxies instead of a single one?

If your VO maps to a single group account at the remote grid sites, you wouldn't. A pool of pilot proxies (try saying that 5 times fast) does not gain you anything. If your VO maps to a pool of accounts at remote grid sites, you should consider using a pool of proxies equivalent to the number of users you have. Why?

Consider the following scenario: Alice, Bob, and Charlie are all in the FUNGUS experiment and form a VO. They are using GlideinWMS. Alice sends 1000 jobs to FNAL via their GlideinWMS using a single pilot proxy. The pilots map to their userid fungus01 at FNAL, and in accordance with the batch system's fair-share policies, the job priority for user fungus01 is decreased significantly.

Bob comes along and submits 1000 jobs via GlideinWMS, while Charlie submits 1000 jobs under his own proxy and not using GlideinWMS. The GlideinWMS pilots launch for Bob, and map to fungus01. Charlie launches his own jobs that get mapped to fungus02. Relative to fungus02, fungus01's priority is terrible, and Bob's jobs sit around waiting for Charlie's, even though Bob didn't occupy the FNAL resources, Alice did!

The solution: have a pool of pilot proxies. We then spread the fair-share penalty amongst fungus01, fungus02, and fungus03, and Bob now can compete on a more equal footing with Charlie and Alice.

Using multiple proxies

Proxies can be specified in the <security><credentials><credential> tags. Multiple credential tags can be entered, one for each proxy file. These can be found in the security section at the top of the xml, in which case the proxies are shared by all groups. They can also be found within <group> tags, in which case they are used only by that group.

One example follows:

<security>
<credentials>
<credential type="grid_proxy" trust_domain="OSG" absfname="/home/frontend/.globus/x509_pilot05_cms_prio.proxy" security_class="cmsprio"/>
<credential type="grid_proxy" trust_domain="OSG" absfname="/home/frontend/.globus/x509_pilot06_cms_prio.proxy" security_class="cmsprio"/>
<credential type="grid_proxy" trust_domain="OSG" absfname="/home/frontend/.globus/x509_pilot07_cms_prio.proxy" security_class="cmsprio"/>
<credential type="grid_proxy" trust_domain="OSG" absfname="/home/frontend/.globus/x509_pilot08_cms_prio.proxy" security_class="cmsprio"/>
<credential type="grid_proxy" trust_domain="OSG" absfname="/home/frontend/.globus/x509_pilot09_cms_prio.proxy" security_class="cmsprio"/>
</credentials>
</security>
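The sharing rule (top-level credentials shared by all groups, group-level credentials used only by that group) can be checked on a configuration with a short ElementTree script. Two assumptions are made here: a group's credentials live under <group><security><credentials>, and a group sees the shared proxies plus its own. The trimmed XML and the helper name are illustrative only.

```python
import xml.etree.ElementTree as ET

# Trimmed, illustrative frontend.xml fragment (assumed layout).
CONFIG = """
<frontend>
  <security>
    <credentials>
      <credential type="grid_proxy" trust_domain="OSG" security_class="cmsprio"
                  absfname="/home/frontend/.globus/x509_pilot05_cms_prio.proxy"/>
    </credentials>
  </security>
  <groups>
    <group name="main">
      <security>
        <credentials>
          <credential type="grid_proxy" trust_domain="OSG" security_class="cmsprio"
                      absfname="/home/frontend/.globus/x509_pilot06_cms_prio.proxy"/>
        </credentials>
      </security>
    </group>
  </groups>
</frontend>
"""

def credentials_per_group(xml_text):
    """Map each group name to the proxy files it can use (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    shared = [c.get("absfname") for c in root.findall("./security/credentials/credential")]
    result = {}
    for group in root.findall("./groups/group"):
        own = [c.get("absfname") for c in group.findall("./security/credentials/credential")]
        # Assumed: shared proxies plus the group's own proxies.
        result[group.get("name")] = shared + own
    return result

print(credentials_per_group(CONFIG))
```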

Run jobs under Singularity

As mentioned above, GLIDEIN_Singularity_Use determines whether or not you want to mandate the use of Singularity (via GlideinWMS) and is used both for provisioning and to match and run once the Glidein is on the site. To use Singularity you have to set it to one of REQUIRED, PREFERRED or OPTIONAL, e.g.:

<attr name="GLIDEIN_Singularity_Use" glidein_publish="True" job_publish="True" parameter="False" type="string" value="PREFERRED"/>

The Factory setting and the actual availability of Singularity and of an image will also affect the actual use of Singularity. See the Factory configuration document for a table of how Singularity is negotiated with the entries, using GLIDEIN_Singularity_Use and GLIDEIN_SINGULARITY_REQUIRE (the entry variable) to decide whether the Glidein can run there and whether it should use Singularity.

To use Singularity you must also (in the general or group configuration):

- Use the default wrapper script that we provide (or a similar one). In other words a line like:

<file absfname="/var/lib/gwms-frontend/web-base/frontend/default_singularity_wrapper.sh" wrapper="True"/>

must be added in the <files> section of the general or group parts of the Frontend configuration. NOTE: Remember to remove your previous VO wrapper if you had one, e.g. if you were using Singularity via OSG.

- Specify an image. You can specify one single image or a dictionary of images, SINGULARITY_IMAGES_DICT, and pick one with the variable REQUIRED_OS (set in the job or in the Frontend group).

If you use SINGULARITY_IMAGES_DICT and REQUIRED_OS, the value of REQUIRED_OS must be 'any' or one of the keys used in the dictionary.

To specify the image dictionary you have a couple of options:

- 2a. Run a pre-singularity script like generic_pre_singularity_setup.sh from /var/lib/gwms-frontend/web-base/frontend/ (or a similar one written for your VO). To do this, add a line such as:

<file absfname="/var/lib/gwms-frontend/web-base/frontend/generic_pre_singularity_setup.sh" after_entry="False" executable="True" period="0" untar="False" wrapper="False"/>

in the <files> section as before. This script sets SINGULARITY_IMAGES_DICT with the OSG images in CVMFS for "rhel7" and "rhel6". You can select one of them by setting REQUIRED_OS to one of the keys; "rhel7" is the default.

- 2b. You can directly set SINGULARITY_IMAGES_DICT in your group attributes by adding a line like:

<attr name="SINGULARITY_IMAGES_DICT" glidein_publish="True" job_publish="True" parameter="False" type="string" value="default:/cvmfs/singularity.opensciencegrid.org/opensciencegrid/osgvo:el6,rhel7:/cvmfs/singularity.opensciencegrid.org/opensciencegrid/osgvo:el7"/>

Then you can use REQUIRED_OS to select one of the images.

- 2c. You can ask the Factory to set SINGULARITY_IMAGES_DICT for you and use REQUIRED_OS to select the image.

- 2d. You can set SingularityImage in the job submit file, e.g.:

+SingularityImage = "/cvmfs/singularity.opensciencegrid.org/PATH_NAME_TO_THE_IMAGE"


- Specify bind-mounts for Singularity images using GLIDEIN_SINGULARITY_BINDPATH. These bind-mounts override the ones listed by the Factory in GLIDEIN_SINGULARITY_BINDPATH_DEFAULT.

If one or more of the specified paths does not exist on the node, it will be removed from the list. Here is an example:

<attr name="GLIDEIN_SINGULARITY_BINDPATH" const="False" glidein_publish="True" job_publish="True" parameter="True" publish="True" type="string" value="/vo_files,/src_path:/dst_path"/>.

See the custom variables file for more information about the bind mounts.

- Specify additional options for the singularity command using the attribute GLIDEIN_SINGULARITY_OPTS.
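Two of the rules above lend themselves to a quick sketch: picking an image from SINGULARITY_IMAGES_DICT with REQUIRED_OS, and dropping bind-mounts whose path is missing on the node. The function names are hypothetical, and so is the handling of REQUIRED_OS='any' (assumed here to use the "default" key, else the first entry); the real wrapper script may behave differently. Since image paths can themselves contain ':' (e.g. osgvo:el7), only the first ':' of each entry separates the key from the path.

```python
import os

def parse_images_dict(value):
    """Parse 'key:path,key:path' (hypothetical helper).

    Only the first ':' splits key and path, because image paths may
    contain ':' themselves.
    """
    images = {}
    for item in value.split(","):
        key, _, path = item.partition(":")
        images[key.strip()] = path.strip()
    return images

def select_image(images, required_os="any"):
    """Pick an image for REQUIRED_OS (assumed 'any' fallback order)."""
    if required_os == "any":
        if "default" in images:
            return images["default"]
        return next(iter(images.values()), None)
    if required_os not in images:
        raise KeyError(f"REQUIRED_OS={required_os!r} not among {sorted(images)}")
    return images[required_os]

def filter_bindpath(bindpath, exists=os.path.exists):
    """Drop bind-mounts whose source path is missing on the node.

    Entries are 'path' or 'src:dst'; only the source side is checked.
    """
    kept = [entry for entry in bindpath.split(",")
            if entry and exists(entry.split(":", 1)[0])]
    return ",".join(kept)
```

With the SINGULARITY_IMAGES_DICT value from option 2b, REQUIRED_OS="rhel7" selects the osgvo:el7 image, and a REQUIRED_OS that is neither 'any' nor a key is rejected.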

Starting a Glidein Frontend Daemon

Once you have the desired configuration file, move to the VO Frontend directory and launch the command:

./frontend_startup start

With the configuration above, all the activity messages will go into

group_*/log/frontend_info.<date>.log

while the debug, warning and error messages go into

group_*/log/frontend_debug.<date>.log
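The split between the two log files follows the two process_log entries in the example configuration (msg_types="INFO" for the info log, msg_types="DEBUG,ERR,WARN" for the debug log). The sketch below reproduces only that routing idea with Python's standard logging module, writing to in-memory streams. It is not the Frontend's logging code: mapping ERR/WARN to Python's ERROR/WARNING levels is an assumption, and rotation, compression and retention (max_days, max_mbytes, backup_count) are not modeled.

```python
import io
import logging

class LevelFilter(logging.Filter):
    """Let through only records whose level name is in the given set."""

    def __init__(self, levelnames):
        super().__init__()
        self.levelnames = set(levelnames)

    def filter(self, record):
        return record.levelname in self.levelnames

def make_frontend_logger(info_stream, debug_stream):
    """Route INFO to one stream and DEBUG/WARNING/ERROR to another."""
    logger = logging.getLogger("frontend_sketch")
    logger.setLevel(logging.DEBUG)
    logger.propagate = False
    logger.handlers.clear()
    routes = ((info_stream, {"INFO"}), (debug_stream, {"DEBUG", "WARNING", "ERROR"}))
    for stream, levels in routes:
        handler = logging.StreamHandler(stream)
        handler.addFilter(LevelFilter(levels))
        handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logger

info_log, debug_log = io.StringIO(), io.StringIO()
log = make_frontend_logger(info_log, debug_log)
log.info("iteration completed")
log.warning("proxy close to expiration")
print(info_log.getvalue(), end="")   # INFO iteration completed
print(debug_log.getvalue(), end="")  # WARNING proxy close to expiration
```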