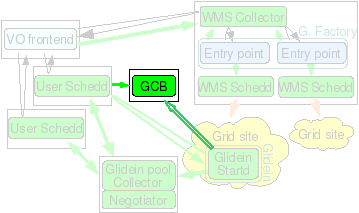


Advanced Condor Configuration

Multiple Schedds

These instructions assume you have installed Condor v7.0.5 or later.

For the purposes of the examples shown here, the Condor install location is /opt/glidecondor, the working directory is /opt/glidecondor/condor_local and the machine name is mymachine.fnal.gov.

If you want to use a different setup, make the necessary changes.

Note: If you specified any of these options using the glideinWMS configuration-based installer, these files will already have been created and these initialization steps performed. These instructions are relevant to any post-installation changes you want to make.

Unless explicitly mentioned, all operations are to be performed as the user that installed Condor.

Increase the number of available file descriptors

When using multiple schedds, you may want to consider increasing the number of available file descriptors. This can be done by issuing a "ulimit -n" command as well as by changing the values in the /etc/security/limits.conf file.
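A minimal sketch of both steps follows. It assumes a Condor user named condor and a target of 16384 descriptors; both values are illustrative, not taken from this guide. The limits_conf_entries function is a hypothetical helper that only prints the lines, so nothing on the system is changed.

```shell
#!/bin/bash
# Hypothetical helper: print /etc/security/limits.conf entries that raise the
# open-file limit (nofile) for a user. Line format: <domain> <type> <item> <value>
limits_conf_entries() {
  local user="$1" nofile="$2"
  printf '%s soft nofile %s\n' "$user" "$nofile"
  printf '%s hard nofile %s\n' "$user" "$nofile"
}

# Show the limits currently in effect for this shell.
echo "soft limit: $(ulimit -Sn)"
echo "hard limit: $(ulimit -Hn)"

# Example only: 'condor' and 16384 are assumed values; use your own Condor user.
limits_conf_entries condor 16384
```

Append the printed lines to /etc/security/limits.conf as root; they typically take effect for new login sessions. In an already running shell, an unprivileged user can raise the soft limit with ulimit -n only up to the hard limit.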

Using the condor_shared_port feature

The Condor shared_port_daemon is available in Condor 7.5.3+.

glideinWMS V2.5.2+

Additional information on this daemon can be found in:

Condor manual 3.1.2 The Condor Daemons

Condor manual 3.7.2 Reducing Port Usage with the condor_shared_port Daemon

Your /opt/glidecondor/condor_config.d/02_gwms_schedds.config will need to contain the following attributes. Port 9615 is the default port for the schedds.

Note: Both the SCHEDD and SHADOW processes need to specify that the shared port option is in effect.

#-- Enable shared_port_daemon

SHADOW.USE_SHARED_PORT = True

SCHEDD.USE_SHARED_PORT = True

SCHEDD.SHARED_PORT_ARGS = -p 9615

DAEMON_LIST = $(DAEMON_LIST), SHARED_PORT

glideinWMS V2.5.1 and earlier

Additional information on this daemon can be found in:

Condor manual 3.1.2 The Condor Daemons

Condor manual 3.7.2 Reducing Port Usage with the condor_shared_port Daemon

If you are using this feature, there are 3 additional variables that must be added to the schedd setup script described in the create setup files section:

_CONDOR_USE_SHARED_PORT

_CONDOR_SHARED_PORT_DAEMON_AD_FILE

_CONDOR_DAEMON_SOCKET_DIR

In addition, your /opt/glidecondor/condor_local/condor_config.local will need to contain the following attributes. Port 9615 is the default port for the schedds.

Note: Both the SCHEDD and SHADOW processes need to specify that the shared port option is in effect.

#-- Enable shared_port_daemon

SHADOW.USE_SHARED_PORT = True

SCHEDD.USE_SHARED_PORT = True

SCHEDD.SHARED_PORT_ARGS = -p 9615

DAEMON_LIST = $(DAEMON_LIST), SHARED_PORT

Multiple Schedds in glideinWMS V2.5.2+

The following needs to be added to your Condor config file for each additional schedd desired. Note the numeric suffix used to distinguish each schedd.

If the multiple schedds are being used on your WMS Collector, where Condor-G is used to submit the glidein pilot jobs, the SCHEDD(GLIDEINS/JOBS)2_ENVIRONMENT attribute shown below is required. Otherwise, it should be omitted.

For the WMS Collector:

SCHEDDGLIDEINS2 = $(SCHEDD)

SCHEDDGLIDEINS2_ARGS = -local-name scheddglideins2

SCHEDD.SCHEDDGLIDEINS2.SCHEDD_NAME = schedd_glideins2

SCHEDD.SCHEDDGLIDEINS2.SCHEDD_LOG = $(LOG)/SchedLog.$(SCHEDD.SCHEDDGLIDEINS2.SCHEDD_NAME)

SCHEDD.SCHEDDGLIDEINS2.LOCAL_DIR = $(LOCAL_DIR)/$(SCHEDD.SCHEDDGLIDEINS2.SCHEDD_NAME)

SCHEDD.SCHEDDGLIDEINS2.EXECUTE = $(SCHEDD.SCHEDDGLIDEINS2.LOCAL_DIR)/execute

SCHEDD.SCHEDDGLIDEINS2.LOCK = $(SCHEDD.SCHEDDGLIDEINS2.LOCAL_DIR)/lock

SCHEDD.SCHEDDGLIDEINS2.PROCD_ADDRESS = $(SCHEDD.SCHEDDGLIDEINS2.LOCAL_DIR)/procd_pipe

SCHEDD.SCHEDDGLIDEINS2.SPOOL = $(SCHEDD.SCHEDDGLIDEINS2.LOCAL_DIR)/spool

SCHEDD.SCHEDDGLIDEINS2.SCHEDD_ADDRESS_FILE = $(SCHEDD.SCHEDDGLIDEINS2.SPOOL)/.schedd_address

SCHEDD.SCHEDDGLIDEINS2.SCHEDD_DAEMON_AD_FILE = $(SCHEDD.SCHEDDGLIDEINS2.SPOOL)/.schedd_classad

SCHEDDGLIDEINS2_LOCAL_DIR_STRING = "$(SCHEDD.SCHEDDGLIDEINS2.LOCAL_DIR)"

SCHEDD.SCHEDDGLIDEINS2.SCHEDD_EXPRS = LOCAL_DIR_STRING

SCHEDDGLIDEINS2_ENVIRONMENT = "_CONDOR_GRIDMANAGER_LOG=$(LOG)/GridManagerLog.$(SCHEDD.SCHEDDGLIDEINS2.SCHEDD_NAME).$(USERNAME)"

DAEMON_LIST = $(DAEMON_LIST), SCHEDDGLIDEINS2

DC_DAEMON_LIST = + SCHEDDGLIDEINS2

For the Submit node:

SCHEDDJOBS2 = $(SCHEDD)

SCHEDDJOBS2_ARGS = -local-name scheddjobs2

SCHEDD.SCHEDDJOBS2.SCHEDD_NAME = schedd_jobs2

SCHEDD.SCHEDDJOBS2.SCHEDD_LOG = $(LOG)/SchedLog.$(SCHEDD.SCHEDDJOBS2.SCHEDD_NAME)

SCHEDD.SCHEDDJOBS2.LOCAL_DIR = $(LOCAL_DIR)/$(SCHEDD.SCHEDDJOBS2.SCHEDD_NAME)

SCHEDD.SCHEDDJOBS2.EXECUTE = $(SCHEDD.SCHEDDJOBS2.LOCAL_DIR)/execute

SCHEDD.SCHEDDJOBS2.LOCK = $(SCHEDD.SCHEDDJOBS2.LOCAL_DIR)/lock

SCHEDD.SCHEDDJOBS2.PROCD_ADDRESS = $(SCHEDD.SCHEDDJOBS2.LOCAL_DIR)/procd_pipe

SCHEDD.SCHEDDJOBS2.SPOOL = $(SCHEDD.SCHEDDJOBS2.LOCAL_DIR)/spool

SCHEDD.SCHEDDJOBS2.SCHEDD_ADDRESS_FILE = $(SCHEDD.SCHEDDJOBS2.SPOOL)/.schedd_address

SCHEDD.SCHEDDJOBS2.SCHEDD_DAEMON_AD_FILE = $(SCHEDD.SCHEDDJOBS2.SPOOL)/.schedd_classad

SCHEDDJOBS2_LOCAL_DIR_STRING = "$(SCHEDD.SCHEDDJOBS2.LOCAL_DIR)"

SCHEDD.SCHEDDJOBS2.SCHEDD_EXPRS = LOCAL_DIR_STRING

DAEMON_LIST = $(DAEMON_LIST), SCHEDDJOBS2

DC_DAEMON_LIST = + SCHEDDJOBS2
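The per-schedd block above follows a fixed naming pattern, so it can be generated instead of copied and edited by hand, which avoids mismatches between the daemon name, the -local-name value and SCHEDD_NAME. The sketch below uses a hypothetical helper, schedd_config_block (not part of glideinWMS), and assumes the lower-case daemon name is used as the -local-name value, as in the WMS Collector example.

```shell
#!/bin/bash
# Hypothetical helper: print the per-schedd Condor config block shown above.
#   $1 = daemon name in upper case, e.g. SCHEDDJOBS3
#   $2 = schedd name, e.g. schedd_jobs3
#   $3 = "glideins" to also emit the Condor-G GridManager log setting
schedd_config_block() {
  local daemon="$1" name="$2" kind="$3"
  local local_name pfx
  local_name=$(echo "$daemon" | tr '[:upper:]' '[:lower:]')
  pfx="SCHEDD.${daemon}"
  # Unquoted heredoc: \$ keeps Condor's $(...) macros literal, ${...} expands.
  cat <<EOF
${daemon} = \$(SCHEDD)
${daemon}_ARGS = -local-name ${local_name}
${pfx}.SCHEDD_NAME = ${name}
${pfx}.SCHEDD_LOG = \$(LOG)/SchedLog.\$(${pfx}.SCHEDD_NAME)
${pfx}.LOCAL_DIR = \$(LOCAL_DIR)/\$(${pfx}.SCHEDD_NAME)
${pfx}.EXECUTE = \$(${pfx}.LOCAL_DIR)/execute
${pfx}.LOCK = \$(${pfx}.LOCAL_DIR)/lock
${pfx}.PROCD_ADDRESS = \$(${pfx}.LOCAL_DIR)/procd_pipe
${pfx}.SPOOL = \$(${pfx}.LOCAL_DIR)/spool
${pfx}.SCHEDD_ADDRESS_FILE = \$(${pfx}.SPOOL)/.schedd_address
${pfx}.SCHEDD_DAEMON_AD_FILE = \$(${pfx}.SPOOL)/.schedd_classad
${daemon}_LOCAL_DIR_STRING = "\$(${pfx}.LOCAL_DIR)"
${pfx}.SCHEDD_EXPRS = LOCAL_DIR_STRING
EOF
  # Only schedds on the WMS Collector (Condor-G) need the GridManager log.
  if [ "$kind" = "glideins" ]; then
    echo "${daemon}_ENVIRONMENT = \"_CONDOR_GRIDMANAGER_LOG=\$(LOG)/GridManagerLog.\$(${pfx}.SCHEDD_NAME).\$(USERNAME)\""
  fi
  echo "DAEMON_LIST = \$(DAEMON_LIST), ${daemon}"
  echo "DC_DAEMON_LIST = + ${daemon}"
}

# Example: a third schedd on a submit node.
schedd_config_block SCHEDDJOBS3 schedd_jobs3
```

Paste the printed block into your Condor config file; the directories it references still need to be created.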

The directories defined by these attributes above will need to be created:

LOCAL_DIR

EXECUTE

SPOOL

LOCK

A script is available to do this for you, provided the attributes are defined with the naming convention shown. If the directories already exist, it will verify their existence and ownership; if they do not exist, they will be created.

source /opt/glidecondor/condor.sh

GLIDEINWMS_LOCATION/install/services/init_schedd.sh

(sample output)

Validating schedd: SCHEDDJOBS2

Processing schedd: SCHEDDJOBS2

SCHEDD.SCHEDDJOBS2.LOCAL_DIR: /opt/glidecondor/condor_local/schedd_jobs2

... created

SCHEDD.SCHEDDJOBS2.EXECUTE: /opt/glidecondor/condor_local/schedd_jobs2/execute

... created

SCHEDD.SCHEDDJOBS2.SPOOL: /opt/glidecondor/condor_local/schedd_jobs2/spool

... created

SCHEDD.SCHEDDJOBS2.LOCK: /opt/glidecondor/condor_local/schedd_jobs2/lock

... created
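The directory step can be sketched as follows. This is a hypothetical function, not the glideinWMS init_schedd.sh; it only creates, or checks the ownership of, the four directories listed above, assuming the default subdirectory names execute, spool and lock.

```shell
#!/bin/bash
# Hypothetical sketch (NOT glideinWMS init_schedd.sh): create LOCAL_DIR and its
# execute, spool and lock subdirectories for one schedd, or verify that
# existing ones are owned by the current user.
init_schedd_dirs() {
  local local_dir="$1" d
  for d in "$local_dir" "$local_dir/execute" "$local_dir/spool" "$local_dir/lock"; do
    if [ -d "$d" ]; then
      # -O: true if the file exists and is owned by the effective user ID.
      if [ -O "$d" ]; then
        echo "$d ... exists"
      else
        echo "$d ... exists but is not owned by $(id -un)" >&2
        return 1
      fi
    else
      mkdir -p "$d" && echo "$d ... created" || return 1
    fi
  done
}

# Example with a throwaway base directory instead of /opt/glidecondor/condor_local.
base=$(mktemp -d)
init_schedd_dirs "$base/schedd_jobs2"
```

For real use, point it at each schedd's LOCAL_DIR (for example /opt/glidecondor/condor_local/schedd_jobs2) while running as the user that installed Condor.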

Multiple Schedds in glideinWMS V2.5.1

Create setup files

If not already created during installation, you will need to create a set of files to support multiple schedds. The necessary steps are described below.

/opt/glidecondor/new_schedd_setup.sh

(example new_schedd_setup.sh)

# Adds the necessary attributes when the schedds are initialized and started.

if [ $# -ne 1 ]

then

echo "ERROR: arg1 should be schedd name"

return 1

fi

LD=`condor_config_val LOCAL_DIR`

export _CONDOR_SCHEDD_NAME=schedd_$1

export _CONDOR_MASTER_NAME=${_CONDOR_SCHEDD_NAME}

# SCHEDD and MASTER names MUST be the same (Condor requirement)

export _CONDOR_DAEMON_LIST="MASTER, SCHEDD,QUILL"

export _CONDOR_LOCAL_DIR=$LD/$_CONDOR_SCHEDD_NAME

export _CONDOR_LOCK=$_CONDOR_LOCAL_DIR/lock

#-- condor_shared_port attributes ---

export _CONDOR_USE_SHARED_PORT=True

export _CONDOR_SHARED_PORT_DAEMON_AD_FILE=$LD/log/shared_port_ad

export _CONDOR_DAEMON_SOCKET_DIR=$LD/log/daemon_sock

#------------------------------------

unset LD

The same file can be downloaded from example-config/multi_schedd/new_schedd_setup.sh.

/opt/glidecondor/init_schedd.sh

(example init_schedd.sh)

This script creates the necessary directories and files for the additional schedds. It is only used to initialize a new secondary schedd (see the Initialize schedds section).

#!/bin/bash
CONDOR_LOCATION=/opt/glidecondor
script=$CONDOR_LOCATION/new_schedd_setup.sh
# source with arguments requires bash
source "$script" "$1"
if [ "$?" != "0" ]; then
  echo "ERROR in $script"
  exit 1
fi
# add whatever other config you need
# create needed directories
$CONDOR_LOCATION/sbin/condor_init

This script needs to be made executable by the user that installed Condor:

chmod u+x /opt/glidecondor/init_schedd.sh

The same file can be downloaded from example-config/multi_schedd/init_schedd.sh.

/opt/glidecondor/start_master_schedd.sh

(example start_master_schedd.sh)

This script is used to start the secondary schedds (see the Starting up schedds section).

#!/bin/bash
CONDOR_LOCATION=/opt/glidecondor
export CONDOR_CONFIG=$CONDOR_LOCATION/etc/condor_config
script=$CONDOR_LOCATION/new_schedd_setup.sh
source "$script" "$1"
# do not start a master with the default config if the setup failed
if [ "$?" != "0" ]; then
  echo "ERROR in $script"
  exit 1
fi
# add whatever other config you need
$CONDOR_LOCATION/sbin/condor_master

This script needs to be made executable by the user that installed Condor:

chmod u+x /opt/glidecondor/start_master_schedd.sh

The same file can be downloaded from example-config/multi_schedd/start_master_schedd.sh.

Initialize schedds

To initialize the secondary schedds, use /opt/glidecondor/init_schedd.sh created above.

If you came here from another document, make sure you configure the schedds specified there.

For example, supposing you want to create schedds named schedd_jobs1, schedd_jobs2 and schedd_glideins1, you would run:

/opt/glidecondor/init_schedd.sh jobs1

/opt/glidecondor/init_schedd.sh jobs2

/opt/glidecondor/init_schedd.sh glideins1
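When several schedds are needed, the per-schedd commands above can be wrapped in a loop. run_for_schedds is a hypothetical helper, not part of glideinWMS; it takes the script path as its first argument and stops at the first schedd that fails, so a broken setup is not silently skipped.

```shell
#!/bin/bash
# Hypothetical wrapper: run a per-schedd script (e.g. init_schedd.sh) once for
# each schedd suffix, stopping at the first failure.
run_for_schedds() {
  local script="$1"; shift
  local name
  for name in "$@"; do
    echo "Running $script $name"
    "$script" "$name" || { echo "ERROR: $script failed for $name" >&2; return 1; }
  done
}

# Usage with the suffixes from the example above:
# run_for_schedds /opt/glidecondor/init_schedd.sh jobs1 jobs2 glideins1
```

The same wrapper can drive /opt/glidecondor/start_master_schedd.sh once the Collector is running.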

Starting up schedds

If you came to this document as part of another installation, go back and follow those instructions.

Otherwise, when you are ready, you can start the schedds by running /opt/glidecondor/start_master_schedd.sh created above.

Note: Always start them after you have started the Collector.

For example, supposing you want to start schedds named schedd_jobs1, schedd_jobs2 and schedd_glideins1, you would run:

/opt/glidecondor/start_master_schedd.sh jobs1

/opt/glidecondor/start_master_schedd.sh jobs2

/opt/glidecondor/start_master_schedd.sh glideins1

Submission and monitoring

The secondary schedds can be seen by issuing:

condor_status -schedd

To submit to or query a secondary schedd, you need to use the -name option, like:

condor_submit -name schedd_jobs1@ job.jdl

condor_q -name schedd_jobs1@

Multiple Collectors

This section will describe the steps (configuration) necessary to add additional (secondary) Condor collectors for the WMS and/or User Collectors.

Note: If you specified any of these options using the glideinWMS configuration-based installer, these files will already have been created and these initialization steps performed. These instructions are relevant to any post-installation changes you want to make.

Important: When secondary (additional) collectors are added to either the WMS Collector or User Collector, changes must also be made to the VOFrontend configurations so they are made aware of them.

Condor configuration changes

For each secondary collector, the following Condor attributes are required:

COLLECTORnn = $(COLLECTOR)

COLLECTORnn_ENVIRONMENT = "_CONDOR_COLLECTOR_LOG=$(LOG)/CollectornnLog"

COLLECTORnn_ARGS = -f -p port_number

DAEMON_LIST = $(DAEMON_LIST), COLLECTORnn

In the above example, nn is an arbitrary value that uniquely identifies each secondary collector. Each secondary collector must also have a unique port_number.
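Because every secondary collector follows the same pattern, the entries can be generated rather than typed. In this sketch, secondary_collectors is a hypothetical helper; it numbers collectors from a given index and assigns consecutive ports, which also makes it easy to list them later as a contiguous range in the VOFrontend configuration.

```shell
#!/bin/bash
# Hypothetical generator for secondary collector entries in the pattern above.
#   $1 = first index, $2 = first port, $3 = number of collectors
secondary_collectors() {
  local first="$1" port="$2" count="$3" i n
  for ((i = 0; i < count; i++)); do
    n=$((first + i))
    echo "COLLECTOR${n} = \$(COLLECTOR)"
    echo "COLLECTOR${n}_ENVIRONMENT = \"_CONDOR_COLLECTOR_LOG=\$(LOG)/Collector${n}Log\""
    echo "COLLECTOR${n}_ARGS = -f -p $((port + i))"
    echo "DAEMON_LIST = \$(DAEMON_LIST), COLLECTOR${n}"
  done
}

# Example: five secondary collectors on ports 9619-9623.
secondary_collectors 1 9619 5
```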

After these changes have been made in your Condor configuration file, restart Condor to effect the change. You should then see these collector processes running (this example has 5 secondary collectors):

user 17732 1 0 13:34 ? 00:00:00 /usr/local/glideins/separate-no-privsep-7-6/condor-userpool/sbin/condor_master
user 17735 17732 0 13:34 ? 00:00:00 condor_collector -f primary
user 17736 17732 0 13:34 ? 00:00:00 condor_negotiator -f
user 17737 17732 0 13:34 ? 00:00:00 condor_collector -f -p 9619 secondary
user 17738 17732 0 13:34 ? 00:00:00 condor_collector -f -p 9620 secondary
user 17739 17732 0 13:34 ? 00:00:00 condor_collector -f -p 9621 secondary
user 17740 17732 0 13:34 ? 00:00:00 condor_collector -f -p 9622 secondary
user 17741 17732 0 13:34 ? 00:00:00 condor_collector -f -p 9623 secondary

VOFrontend configuration changes for additional WMS Collectors

For each additional collector added to the WMS Collector, a collector element must be added to the frontend.xml configuration file. When complete, you must reconfigure the VOFrontend to effect the change.

<frontend ... >
  :
  <match ... >
    <factory ... >
      <collectors>
        <collector DN="WMS_COLLECTOR_DN" comment="Primary WMS/Factory collector" factory_identity=" " my_identity=" " node="WMS_COLLECTOR_NODE:primary_port_number"/>
        <collector DN="WMS_COLLECTOR_DN" comment="Secondary WMS/Factory collector" factory_identity=" " my_identity=" " node="WMS_COLLECTOR_NODE:secondary_port_number"/>
        :
      </collectors>
    </factory>
  </match>
  :
</frontend>

VOFrontend configuration changes for additional User Collectors

In the VOFrontend configuration, there are 2 ways of specifying the secondary User Collectors depending on how the ports were assigned:

- If the port numbers were assigned as a contiguous range, only one additional collector element is required, specifying the port range (e.g. 9640:9645).

- If the port numbers were not assigned as a contiguous range, then an additional collector element is required for each secondary collector.

<frontend ... >
  :
  <collectors>
    <collector DN="USER_COLLECTOR_DN" secondary="False" node="USER_COLLECTOR_NODE:primary_port_number"/>
    <!-- If a contiguous range of ports was assigned, then just 1 collector element is required, specifying the range -->
    <collector DN="USER_COLLECTOR_DN" secondary="True" node="USER_COLLECTOR_NODE:secondary_port_start:secondary_port_end"/>
    <!-- If a contiguous range of ports was not assigned, then a collector element is required for each secondary port -->
    <collector DN="USER_COLLECTOR_DN" secondary="True" node="USER_COLLECTOR_NODE:secondary_port"/>
    :
  </collectors>
  :
</frontend>
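Deciding between the two forms above amounts to checking whether the sorted secondary ports have gaps. The sketch below uses a hypothetical helper, secondary_collector_elements; USER_COLLECTOR_DN and USER_COLLECTOR_NODE remain placeholders, and the range uses the start:end syntax described above. It prints one range element when the ports are contiguous and one element per port otherwise.

```shell
#!/bin/bash
# Hypothetical helper: print <collector> elements for the given secondary ports,
# as one start:end range if they are contiguous, else one element per port.
secondary_collector_elements() {
  [ "$#" -gt 0 ] || return 1
  local sorted first last expected p contiguous=1
  sorted=$(printf '%s\n' "$@" | sort -n)
  first=$(echo "$sorted" | head -n 1)
  last=$(echo "$sorted" | tail -n 1)
  expected=$first
  # Walk the sorted ports; any gap (or duplicate) breaks contiguity.
  for p in $sorted; do
    if [ "$p" -ne "$expected" ]; then
      contiguous=0
      break
    fi
    expected=$((expected + 1))
  done
  if [ "$contiguous" -eq 1 ] && [ "$first" -ne "$last" ]; then
    echo "<collector DN=\"USER_COLLECTOR_DN\" secondary=\"True\" node=\"USER_COLLECTOR_NODE:${first}:${last}\"/>"
  else
    for p in $sorted; do
      echo "<collector DN=\"USER_COLLECTOR_DN\" secondary=\"True\" node=\"USER_COLLECTOR_NODE:${p}\"/>"
    done
  fi
}

secondary_collector_elements 9640 9641 9642 9643 9644 9645
secondary_collector_elements 9640 9642 9650
```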

GCB Installation

NOTE: It is strongly recommended to use CCB, available in newer versions of Condor, instead of GCB. GCB is no longer supported in Condor 7.6 and later. CCB provides the same functionality as GCB and has performance benefits. Using GCB requires installing an additional Condor daemon, whereas CCB is integrated in newer versions of Condor.

This node will serve as a Generic Connection Brokering (GCB) node. If you are working across firewalls or NATs and are using an older version of Condor (before v7.3.0), you will need one or more of these. If in use, GCB is needed every time you have a firewall or a NAT. If this node dies, all the glideins relying on it will die with it. If possible, use CCB instead.

Hardware requirements

This machine needs a reasonably recent CPU and a small amount of memory (256MB should be enough).

It must have reliable network connectivity and must be on the public internet, with no firewalls. It will work as a router. It will use 20k IP ports, so it should not be collocated with other network-intensive applications.

The machine must be very stable. If the GCB dies, all the glideins relying on it will die with it. (Multiple GCBs can mitigate this by limiting the damage of a downtime, but GCB should still run on the most stable machine affordable.)

About 5GB of disk space is needed for Condor binaries and log files.

As these specifications are not disk/memory intensive, you may consider collocating it with a Glidein Frontend.

Needed software

You will need a reasonably recent Linux OS (SL4 used at press time), and the Condor distribution.

Installation instructions

The GCB should be installed as a non-privileged user.

The whole process is managed by an install script described below. You will need to provide a valid Condor tarball, so you should download it before starting the installer.

Move into the "glideinWMS/install" directory and execute

./glideinWMS_install

You will be presented with this screen:

What do you want to install?

(May select several options at one, using a , separated list)

[1] glideinWMS Collector

[2] Glidein Factory

[3] GCB

[4] pool Collector

[5] Schedd node

[6] Condor for Glidein Frontend

[7] Glidein Frontend

[8] Components

Select 3. Now follow the instructions. The installation is straightforward. The installer will also start the Condor daemons.

Starting and Stopping

To start the Condor daemons, issue:

cd <install dir>

./start_condor.sh

To stop the Condor daemons, issue:

killall condor_master

Verify it is running

You can check that the processes are running:

ps -u `id -un` | grep gcb

You should see one gcb_broker and at least one gcb_relay_server.

You can also check that they are working by pinging it with gcb_broker_query:

<install dir>/sbin/gcb_broker_query <your_ip> freesockets

Fine tuning

Increase the number of available ports

The default installation will set up GCB to handle up to 20k requests. Look in <install dir>/etc/condor_config.local for:

GCB_MAX_RELAY_SERVERS=200

GCB_MAX_CLIENTS_PER_RELAY_SERVER=100

This is enough for approximately 4000 glideins (each glidein uses 5-6 ports).

If you want a single GCB to serve more glideins than that, you can increase those numbers. However, be aware that the OS also has its limits. On most Linux systems, the limit is set in /proc/sys/net/ipv4/ip_local_port_range:

$ cat /proc/sys/net/ipv4/ip_local_port_range

32768 61000

For example, the typical port range listed above has only ~28k ports available. If you want to configure GCB/CCB to serve more than that, first change the system limit, then the GCB/CCB configuration.
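The capacity figures above follow from simple arithmetic: 200 relay servers x 100 clients gives 20,000 ports, which at 5 ports per glidein is 4,000 glideins (about 3,333 at 6 ports). Likewise, the range 32768-61000 holds 61000 - 32768 + 1 = 28,233 ports. The sketch below reproduces these numbers; gcb_capacity and os_port_count are hypothetical helpers, and the default of 5 ports per glidein is the low end of the range quoted above.

```shell
#!/bin/bash
# Hypothetical helper: ports and glideins for given GCB settings.
#   $1 = GCB_MAX_RELAY_SERVERS, $2 = GCB_MAX_CLIENTS_PER_RELAY_SERVER,
#   $3 = ports per glidein (assumed 5 if omitted)
gcb_capacity() {
  local relays="$1" clients="$2" per_glidein="${3:-5}"
  local ports=$((relays * clients))
  echo "ports=$ports glideins=$((ports / per_glidein))"
}

# Hypothetical helper: number of ports in the kernel's local port range
# (inclusive, so high - low + 1). The file path can be overridden.
os_port_count() {
  local range_file="${1:-/proc/sys/net/ipv4/ip_local_port_range}" low high
  read -r low high < "$range_file"
  echo $((high - low + 1))
}

gcb_capacity 200 100
if [ -r /proc/sys/net/ipv4/ip_local_port_range ]; then
  echo "os_ports=$(os_port_count)"
fi
```

If the ports from gcb_capacity exceed os_port_count, raise the system range first, as described above.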

Increase the number of available file descriptors

Note that every port used by the GCB/CCB also consumes an available file descriptor. The default number of file descriptors per process is 1024 on most systems. Increase this limit to ~16k, or to a value higher than the number of ports GCB/CCB is allowed to open.

This can be done by issuing a "ulimit -n" command as well as by changing the values in the /etc/security/limits.conf file.
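Before raising the GCB/CCB port limits, a quick comparison of the soft file-descriptor limit with the number of ports can catch a limit that is too low. fd_limit_covers is a hypothetical helper; it checks the limit of the current shell only, so run it in the same environment (user, start-up script) that launches the Condor daemons.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the soft open-file limit of this shell is
# higher than the number of ports GCB/CCB may open.
fd_limit_covers() {
  local needed="$1" soft
  soft=$(ulimit -Sn)
  if [ "$soft" = "unlimited" ] || [ "$soft" -gt "$needed" ]; then
    echo "OK: soft limit $soft > $needed"
  else
    echo "Too low: soft limit $soft, need more than $needed"
    return 1
  fi
}

# Example: the default GCB setup (200 relay servers x 100 clients).
fd_limit_covers $((200 * 100)) || true
```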

Installing Quill

Required software

- A reasonably recent Linux OS (SL4 used at press time).

- A PostgreSQL server.

- The Condor distribution.

Installation instructions

The installation will assume you have installed Condor v7.0.5 or newer.

The install directory is /opt/glidecondor, the working directory is /opt/glidecondor/condor_local, and the machine name is mymachine.fnal.gov, with IP 131.225.70.222.

If you want to use a different setup, make the necessary changes.

Unless explicitly mentioned, all operations are to be done as root.

Obtain and install PostgreSQL RPMs

Most Linux distributions come with very old versions of PostgreSQL, so you will want to download the latest version.

The RPMs can be found on http://www.postgresql.org/ftp/binary/

At the time of writing, the latest version is v8.2.4, and the RPM files to install are

postgresql-8.2.4-1PGDG.i686.rpm

postgresql-libs-8.2.4-1PGDG.i686.rpm

postgresql-server-8.2.4-1PGDG.i686.rpm

Initialize PostgreSQL

Switch to user postgres:

su - postgres

And initialize the database with:

initdb -A "ident sameuser" -D /var/lib/pgsql/data

Configure PostgreSQL

PostgreSQL by default only accepts local connections, so you need to configure it in order for Quill to use it.

Please do it as user postgres.

To enable TCP/IP traffic, you need to change listen_addresses in /var/lib/pgsql/data/postgresql.conf to:

# Make it listen to TCP ports

listen_addresses = '*'

Moreover, you need to specify which machines will be able to access it.

Unless you have strict security policies forbidding this, I recommend enabling read access to the whole world by adding the following line to /var/lib/pgsql/data/pg_hba.conf:

host all quillreader 0.0.0.0/0 md5

On the other hand, only the local machine should be able to write to the database. So, also add to /var/lib/pgsql/data/pg_hba.conf:

host all quillwriter 131.225.70.222/32 md5
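If you script these pg_hba.conf changes, it helps to make them idempotent so that re-running the setup does not duplicate entries. add_hba_line is a hypothetical helper; the example lines are the ones given above, with this guide's example IP.

```shell
#!/bin/bash
# Hypothetical helper: append a line to pg_hba.conf only if an identical line
# is not already present (-x whole line, -F fixed string).
add_hba_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example, run as user postgres:
# add_hba_line /var/lib/pgsql/data/pg_hba.conf "host all quillreader 0.0.0.0/0 md5"
# add_hba_line /var/lib/pgsql/data/pg_hba.conf "host all quillwriter 131.225.70.222/32 md5"
```

PostgreSQL uses the first pg_hba.conf record that matches a connection, so check that no earlier record already matches these users with a different method. Changes to pg_hba.conf are picked up on a reload, while the listen_addresses change requires a server restart.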

Start PostgreSQL

To start PostgreSQL, just run:

/etc/init.d/postgresql start

There should be no error messages.

Initialize Quill users

Switch to user postgres:

su - postgres

And initialize the Quill users with:

createuser quillreader --no-createdb --no-adduser --no-createrole --pwprompt

# at the prompt, enter the reader password (reader in this example)

createuser quillwriter --createdb --no-adduser --no-createrole --pwprompt

# at the prompt, enter <writer passwd>

psql -c "REVOKE CREATE ON SCHEMA public FROM PUBLIC;"

psql -d template1 -c "REVOKE CREATE ON SCHEMA public FROM PUBLIC;"

psql -d template1 -c "GRANT CREATE ON SCHEMA public TO quillwriter; GRANT USAGE ON SCHEMA public TO quillwriter;"

Configure Condor

Append the following lines to /opt/glidecondor/etc/condor_config:

#############################

# Quill settings

#############################

QUILL_ENABLED = TRUE

QUILL_NAME = quill@$(FULL_HOSTNAME)

QUILL_DB_NAME = $(HOSTNAME)

QUILL_DB_QUERY_PASSWORD = reader

QUILL_DB_IP_ADDR = $(HOSTNAME):5432

QUILL_MANAGE_VACUUM = TRUE

In /opt/glidecondor/condor_local/condor_config.local, add QUILL to DAEMON_LIST, getting something like:

DAEMON_LIST = MASTER, QUILL, SCHEDD

Finally, put the writer passwd into /opt/glidecondor/condor_local/spool/.quillwritepassword:

echo "<writer passwd>" > /opt/glidecondor/condor_local/spool/.quillwritepassword

chown condor /opt/glidecondor/condor_local/spool/.quillwritepassword

chmod go-rwx /opt/glidecondor/condor_local/spool/.quillwritepassword
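With the echo/chmod sequence above, the password file briefly exists with whatever permissions the current umask allows. A slightly safer variant, sketched here as a hypothetical helper write_quill_password, creates the file under umask 077 so it is owner-only from the start; run it as root and keep the chown step from above.

```shell
#!/bin/bash
# Hypothetical helper: write the Quill writer password to a file that is
# readable only by its owner from the moment it is created. The subshell keeps
# the umask change local; rm -f ensures a fresh file so stale, looser
# permissions on an existing file are not kept.
write_quill_password() {
  local file="$1" password="$2"
  ( umask 077 && rm -f "$file" && printf '%s\n' "$password" > "$file" )
}

# Example (as root):
# write_quill_password /opt/glidecondor/condor_local/spool/.quillwritepassword '<writer passwd>'
# chown condor /opt/glidecondor/condor_local/spool/.quillwritepassword
```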